Overview of Evaluation Methods for R&D Programs

A Directory of Evaluation Methods Relevant to Technology Development Programs

Prepared for

U.S. Department of Energy

Office of Energy Efficiency and Renewable Energy

March 2007

by

Rosalie Ruegg, TIA Consulting, Inc.

Gretchen Jordan, Sandia National Laboratories

Acknowledgements

This booklet introducing managers to a variety of methods for evaluating research and

development (R&D) programs was completed for the U.S. Department of Energy (DOE) by

Sandia National Laboratories, Albuquerque, New Mexico, USA under Contract DE-AC04-

94AL8500. Sandia is operated by Sandia Corporation, a subsidiary of Lockheed Martin

Corporation. Jeff Dowd of DOE’s Office of Energy Efficiency and Renewable Energy (EERE),

Office of Planning, Budget and Analysis (OPBA) directed the work. Rosalie Ruegg of TIA

Consulting, Inc. was principal author and she was assisted by Gretchen Jordan of Sandia National

Laboratories. Joe Roop of Pacific Northwest National Laboratory contributed the section on

tracking commercialization of technologies. EERE OPBA also acknowledges the guidance of Sam

Baldwin, EERE Chief Technology Officer, in the production of this booklet. OPBA also thanks

Yaw Agyeman of TMS Inc. for his review of the booklet and assistance in preparing it for

publication.

Notice

This document was prepared as an account of work sponsored by an agency of the United States

government. Neither the United States government nor any agency thereof, nor any of their

employees, makes any warranty, express or implied, or assumes any legal liability or

responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product,

or process disclosed, or represents that its use would not infringe privately owned rights.

Reference herein to any specific commercial product, process, or service by trade name, trademark,

manufacturer, or otherwise does not necessarily constitute or imply its endorsement,

recommendation, or favoring by the United States government or any agency thereof. The views

and opinions of authors expressed herein do not necessarily state or reflect those of the United

States government or any agency thereof.

Preface

The aim of this booklet is to provide a starting point for managers to become aware of and access

the best evaluation methods for their needs.

Technology development programs in DOE extensively and successfully utilize peer review to

evaluate research and development (R&D) activities at the project and program levels. In addition

to peer review, R&D Program Managers are encouraged to use other evaluation methods in order

to obtain information on program effectiveness and realized benefits that cannot be provided using

the peer review method.

The potential benefits of periodically doing systematic studies using other R&D evaluation

methods are considerable. Programs could:

• Generate additional important information for use in continuous program improvement

• Document knowledge benefits that are often unaccounted for when communicating

programs’ value to stakeholders

• Document realized market benefits associated with past research successes

• Better answer questions about cost-effectiveness of the longer term research

This booklet provides an overview of 14 evaluation methods that have proven useful to R&D

program managers in Federal agencies. Each method is briefly defined, its uses are explained, its

limitations are listed, examples of successful use by other R&D managers are provided, and

references are given. The examples are for successful applications of the R&D evaluation

methods taken from evaluation reports by organizations such as DOE’s Office of Energy

Efficiency and Renewable Energy, DOE’s Office of Science, the National Science Foundation, the

National Institute of Standards and Technology, and the National Research Council.

Asking and answering a broader set of questions, and generating multiple lines of evidence

through a variety of R&D evaluation methods, would improve program planning and implementation

and strengthen the defense of programs before OMB and Congress.

Table of Contents

Notice
Preface
Table of Contents

Part I. R&D Evaluation and Its Benefits
1.1 Increasing Program Manager Information on Program Performance
1.2 How this Booklet Can Help You Get the Information You Need
1.3 Why Use a Variety of Evaluation Methods?
1.4 Use of Evaluation by Federal R&D Agencies
1.5 Determining Your Specific Evaluation Needs
1.6 A Roadmap for Using this Booklet to Broaden Evaluation
1.7 Additional Considerations in Evaluation

Part II. Overview of Selected Research Evaluation Methods
2.1 Peer Review/Expert Judgment
2.2 Monitoring, Data Compilation, and Use of “Indicators”
2.3 Bibliometric Methods – Counts and Citation Analysis
2.4 Bibliometric Methods – Data Mining
2.5 Bibliometrics – Hotspot Patent Analysis
2.6 Network Analysis
2.7 Case Study Method
2.8 Survey Method
2.9 Benchmarking Method
2.10 Technology Commercialization Tracking Method
2.11 Benefit-Cost Case Study
2.12 Econometric Methods
2.13 Historical Tracing Method
2.14 Spillover Analysis Using a Combination of Methods

Part I. R&D Evaluation and Its Benefits

1.1 Increasing Program Manager Information on Program Performance

R&D program managers are close to the projects and activities that make up their programs. They

typically are able to relate the ins-and-outs and smallest details to others. They work hard to make

their programs succeed. Yet, they may lack information in the form needed to describe and

document the benefits their programs are producing -- particularly in the interim period after their

direct involvement with projects and other program activities ends and in the longer run when

knowledge and/or market impacts are achieved.

Program managers may need to know:

• If their research is being done right (e.g., has high quality and research efficiency).

• If the program’s R&D efforts are focused on the right research areas.

• How program-created knowledge finds varied applications that generate additional benefits

to the nation.

• How collaborations and other activities stimulated by the program have affected the

nation’s R&D capabilities.

• How their programs are providing benefits to the users of resulting energy-saving and

energy-producing innovations.

• How their programs are enhancing energy security by providing alternative energy sources,

protecting existing sources and having options ready for deployment if warranted by

changing circumstances.

• If their past efforts were worth it and if planned new initiatives will be worth it.

Having this information when it is needed is essential to the long-run success of their programs.

Evaluation can equip program managers with the information needed to improve their programs

and to communicate effectively to others the full range of benefits from R&D efforts. The

inability to fully communicate program impacts translates into too few resources for that program.

“The more that those responsible for research can show that they offer value for money, the more

credible the case for increased resources becomes.”¹

The ultimate goal of a technology development R&D manager is to complete research objectives

that lead to successfully commercialized technologies. In addition to that ultimate goal, two other

goals of a successful program manager are: to continuously improve the program and to

communicate effectively to others the benefits of his or her program. These two goals are

incorporated into an icon that is used in Part 2 of the booklet to remind program managers about

how the various evaluation methods presented can be used to meet their goals.

¹ Luke Georghiou (Professor of Science and Technology Policy and Management, University of Manchester), 2006.

1.2 How this Booklet Can Help You Get the Information You Need

This booklet provides a quick reference guide to evaluation methods for R&D managers in the

U.S. Department of Energy’s Technology Development programs.² While peer review is the form

of R&D evaluation most frequently used by R&D managers, there are other evaluation methods

which are also useful—particularly for estimating program outcomes and impacts retrospectively.

This booklet provides an overview of 14 evaluation methods that have proven useful to R&D

program managers in Federal agencies. Each method is briefly defined, its uses are explained, its

limitations are listed, examples of its successful use by other R&D managers are provided, and

references are given.

The aim is to provide a starting point for managers to become aware of, identify, and access the

best evaluation methods for their needs. It is not to provide a comprehensive treatment of the

methods or step-by-step guidance on how to do a study using a given method. Rather, the booklet

supports the first step in evaluation: determining the kind of study and method that will best serve

your needs.

R&D managers interested in pursuing an evaluation study that uses one of the methods described

in this booklet can contact evaluation professionals in their organization to get assistance with

planning and organizing the study and selecting a reliable independent evaluator to conduct it.³

The booklet is organized in two parts. The remainder of this first part provides context for

understanding how to select among the various evaluation methods. It presents tables and

graphics that together serve as a quick reference roadmap to accessing the methods in the second

part. It also presents background information on R&D evaluation. Then, the second part presents

overviews of 14 evaluation methods. The methods described in Part 2 of this booklet may be

extended at a later time as other new and useful evaluation methods for R&D program managers

are identified.

1.3 Why Use a Variety of Evaluation Methods?

The short answer is that it takes a variety of methods to answer different types of project

management questions. Furthermore, use of a variety of methods provides multiple “lines of

evidence,” and multiple lines of evidence often deepen understanding and strengthen arguments.

Evaluation is an essential tool for good management practice. It is a tool that not only helps

measure a program’s success, but also contributes to its success. Evaluation helps managers plan,

verify, and communicate what they aim to do, decide how to allocate resources, learn how best to

modify or redesign programs, and estimate the resulting program outputs, outcomes, and impacts.

Evaluation also provides information for accountability: Did we do what we said we would do?

Peer review/expert judgment, for example, helps an R&D manager answer questions about research

quality, relevance, and management. It helps R&D managers learn how to design and redesign

program elements and processes, to select projects, to decide whether to continue or discontinue

projects, and how best to modify the research direction of the R&D portfolio. Network analysis is

useful for answering questions about a program’s impact on collaborative research and the

dissemination of knowledge, particularly tacit knowledge. Surveys are useful in answering a

host of questions, such as how satisfied are the program’s customers and how are customers using

program outputs. Citation analysis helps document the linkages between a program’s outputs and

downstream users. Economic case studies can estimate the benefits and costs of program outputs,

including those measurable in monetary terms and those more difficult to measure such as

environmental effects and energy security effects. Benchmarking can help identify where and

how to make improvements by comparing a program with its counterparts abroad. Econometric

methods can help demonstrate that it was the program that caused an outcome and not something

else. Using these and other methods can help a program manager better understand and manage

his or her program so as to achieve its goals, and obtain results needed to communicate

achievements to others.

² For example, applied energy R&D programs. Applied research is defined by OMB as the systematic study to gain knowledge or understanding necessary to determine the means by which a recognized and specific need may be met.

³ In EERE, dedicated evaluation staff are located in the Office of Planning, Budget and Analysis (OPBA).

1.4 Use of Evaluation by Federal R&D Agencies

Use of research evaluation in R&D programs—including multiple evaluation methods—is

widespread among public science and technology agencies. This was demonstrated in 2002, in a

benchmarking workshop in the U.S. sponsored by TEKES, the national technology agency of

Finland, which compared the evaluation efforts of five U.S. science programs or agencies—

National Science Foundation (NSF), National Institutes of Health (NIH), the Department of

Energy’s (DOE’s) Office of Science, DOE’s EERE, and the National Institute of Standards and

Technology’s (NIST’s) Advanced Technology Program (ATP)—as well as science programs in

Canada, Israel, and Finland. The workshop compared these R&D programs in terms of drivers of

evaluation, evaluation methods used, obstacles encountered, and other aspects. Table 1-1 shows a

benchmarking comparison of the diversity of evaluation methods reportedly used by these

organizations as of 2002. You may notice there are opportunities for DOE applied R&D programs

to take advantage of the full range of evaluation methods commonly found useful by research

programs in Federal agencies.

1.5 Determining Your Specific Evaluation Needs

The key question to ask yourself is “Who needs to know what about my program and when?”

You and other program staff are one audience. Senior DOE managers and external parties such as

OMB and Congress are among the other audiences. Generally speaking, program managers are

interested in information about progress and how to improve programs, while senior managers,

OMB staff, and members of Congress are more interested in program outcomes and impacts that

can be attributed to a policy and to questions such as “was it worth it?” In DOE’s EERE, the

current multi-year planning guidance suggests that the program manager have an evaluation

strategy that lays out a plan for answering the most important questions for both types of

audiences over a period of years.

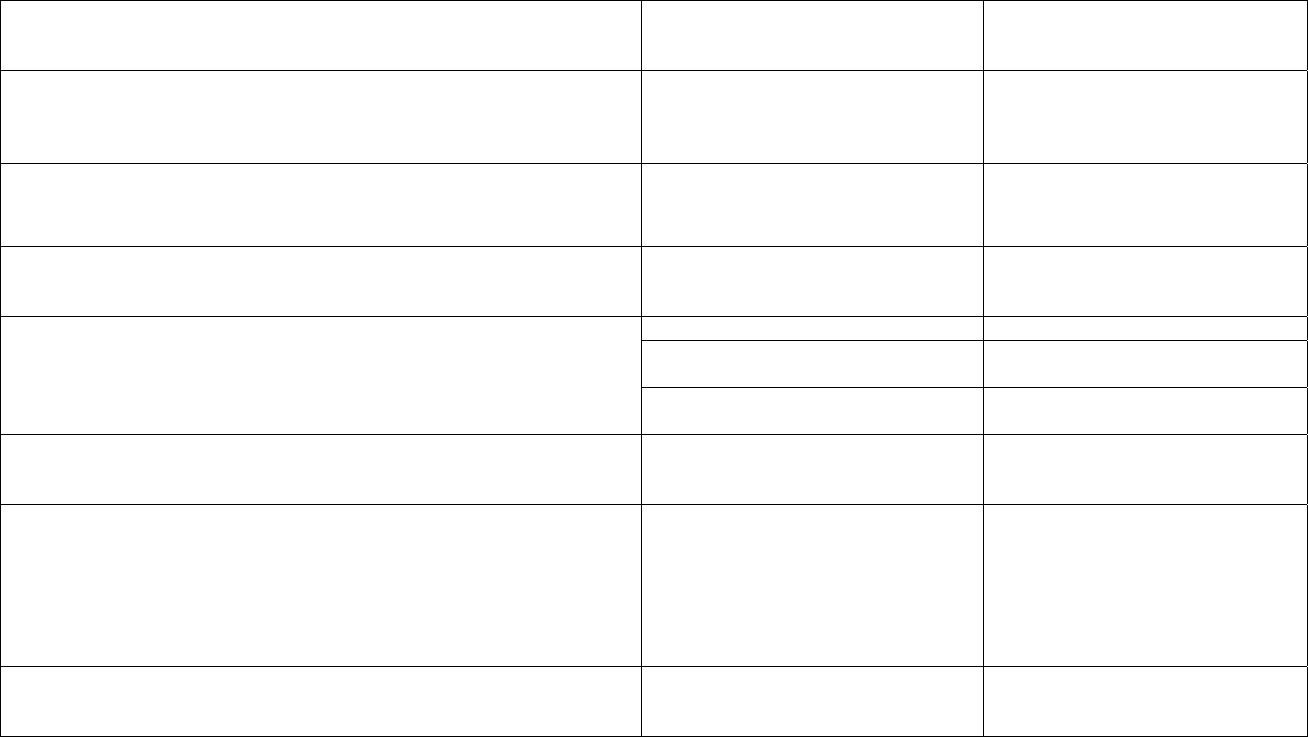

Table 1-1. Methods of Evaluation Used by the Participating Programs

Methods Used    NSF    NIH    DOE/OS    DOE/EERE⁴    ATP    Tekes    IRAP
Surveys    X X X X X X X
Case Study/Impact Analysis    X X X X X X X
Expert Panels, Peer Review, & Focus Groups    X X X X X
Indicator Metrics    X X X X X
Bibliometrics    X X X X
Historical Tracing    X X X
Econometrics    X X X
Benchmarking    X X X X X
Network Analysis    X X
Scorecard    X X X
Mission/Outcome Mapping    X
Options Theory    X
Foresighting    X
Composite Performance Rating System    X
Cost-index method    X
Market Assessment    X

Source: Workshop Proceedings, 2002.

Note: Methods used were not identified for Israel’s MAGNET program, and this tabulation likely understates the use of methods by Canada’s IRAP.

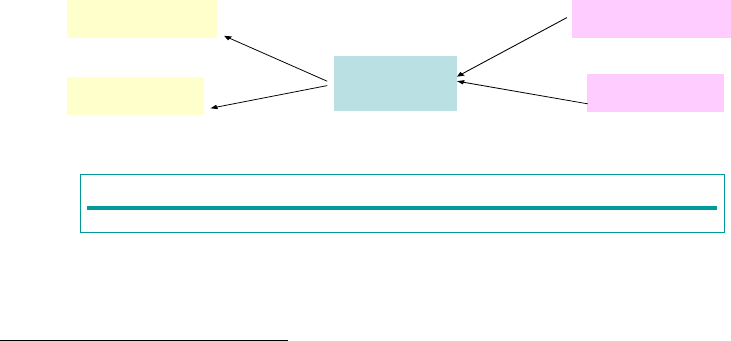

The big questions that require answers can be shown in a very simple diagram of the logic of

publicly funded R&D programs, such as that shown in Figure 1-1.

Before defining specific questions, we recommend you review your program’s detailed logic with

evaluation in mind (or prepare a logic model if you do not already have one). The review can help

you identify the most pressing questions and the audiences for the answers.

As the high-level depiction of Figure 1-1 suggests, some questions important for program

management occur early in the process, some during the interim period, and others further

downstream. Early in the chain, for example, a program manager may wish to track outputs and

assess the formation of research relationships using bibliometric and network analysis methods.

Later, he or she may wish to conduct a survey to determine industry awareness and use of program

outputs. Descriptive case studies may be useful in understanding better the path by which a

particular program innovation is adapted by industry and identifying specific barriers that may

need to be overcome. A hotspot patent analysis can show whether the patents issued by program

researchers are among those heavily cited by others, indicating a burst of interest in the technology

area. Farther out, a historical tracing study may tie program research to important industry

developments, and an economic cluster study may help quantify dollar benefits of the program’s

research in a given field. Also farther out, an econometric study may be desired to measure the

program’s contribution to improvements in the nation’s fuel efficiency and to the environment. A

broadly cast benefit-cost study may help to capture a variety of effects, including option benefits

that provide protection in the face of possible future developments.

⁴ DOE’s EERE is primarily an applied research program.

A program’s “outcomes” and “impacts” are influenced by many factors beyond a program’s

control such as private-sector use of the program’s outputs, domestic and foreign investment in

competing technologies, market prices (such as prices for fuels and other technologies), public

policies, laws, and regulations, as well as other factors. Hence, impact evaluations must consider

the roles of important external factors on a program’s results.

1.6 A Roadmap for Using this Booklet to Broaden Evaluation

The program manager’s questions, such as those identified in short-hand form in Figure 1-1 in the

context of the high-level R&D Logic Model, drive the choice of evaluation methods. In fact, the

variety of recognized evaluation methods has evolved as evaluators have developed ways to

address the principal kinds of questions commonly asked by program managers and policy makers.

The methods provide their answers using different units of measure, and the desired unit of

measure can be an important factor in choosing among the methods. For example, the question

posed may ask for statistical measures, best provided by the survey method. The question may

ask for numbers of publications or patents, best provided by the bibliometrics methods (counts), or

evidence of dissemination of knowledge, best provided by citation analysis or network analysis.

The question may ask for financial measures, such as present-value net benefits or rate of return

on investment, best provided by economic methods. The question may ask for descriptive and

explanatory information or it may probe for understanding of underlying factors, best provided by

case studies.
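
The pairing of desired measure and method described above can be sketched as a simple lookup. This is only an illustration: the dictionary keys and the function name `suggest_methods` are hypothetical labels chosen for the example, and the pairings simply restate the paragraph above rather than the fuller guidance in Tables 1-3 through 1-6.

```python
# Illustrative lookup from the kind of measure a question asks for to the
# method(s) the text names as best suited. Keys are hypothetical labels.
MEASURE_TO_METHODS = {
    "statistical measures": ["survey"],
    "publication or patent counts": ["bibliometrics (counts)"],
    "dissemination of knowledge": ["citation analysis", "network analysis"],
    "financial measures": ["economic methods"],
    "descriptive or explanatory information": ["case study"],
}


def suggest_methods(measure_type: str) -> list[str]:
    """Return the methods associated with a measure type, or an empty list."""
    return MEASURE_TO_METHODS.get(measure_type.strip().lower(), [])


print(suggest_methods("Financial measures"))
```

A lookup like this only narrows the field; the choice of method still depends on the considerations discussed in the rest of Part I.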

Table 1-2 summarizes seven sequential steps to help R&D Managers get started answering

important questions to help achieve program technical and management goals, including a step to

guide them to choose the evaluation method(s) to meet their specific needs.

Table 1-2. Summary Steps for Achieving Program Manager Goals through Evaluation

Step 1 Consult the performance logic diagram shown in Figure 1-1 and identify the phase of the program performance cycle on which you wish to focus.
Step 2 Go to Tables 1-3 through 1-6 and find the one for the selected phase of the program performance cycle.
Step 3 Find within column 1 of the table a question or questions that you would like to have answered.
Step 4 Within the same table, go to column 2 to identify the recommended evaluation method and note the number in parentheses. (No.) gives the listing order of methods in Part 2 of this document.
Step 5 Within the same table, go to column 3 and confirm that the recommended method will provide a type of measure that will likely meet your need.
Step 6 Go to Part 2 of the booklet and find the write-up for the recommended method.
Step 7 After learning more about the method, read Section 1.7, “Additional Considerations in Evaluation.” Then consult with evaluation staff in your organization for further assistance and begin working with an independent evaluator to proceed with a study.

A series of four tables — Tables 1-3 through 1-6, used in conjunction with the R&D Logic Model

in Figure 1-1 — guide program managers to choose the evaluation method(s) to meet their specific

needs. The tables correspond to four distinct phases of the program performance cycle.⁵ Table 1-3

starts with Phase 1, the designing/revising, planning, selecting, and budgeting phase of the

program performance cycle. Table 1-4 moves to Phase 2, the phase during which R&D progress

is made, process mechanisms are implemented, and program outputs are achieved. Table 1-5

continues to Phase 3, when the outputs are disseminated, technologies are handed off to potential

users, and knowledge is acquired by others, during which time the program managers watch for

interim outcomes. Table 1-6 shows what happens in Phase 4 and beyond, during which time

longer term outcomes and impacts occur, including energy savings, improvements in energy

supply, environmental effects, energy security benefits, technology options that may be needed

under changing conditions, and knowledge benefits resulting in new and improved products in

other industries.

Each of the four tables lists in its first column questions a program manager is likely to encounter

during the specified phase of the program performance cycle. Though not exhaustive, the

questions listed indicate the kinds of performance questions that are typically asked during each

phase. If you, the program manager, do not find your question phrased exactly as you would word

it, you should find a question sufficiently similar to allow you to proceed through the Roadmap.

Evaluation methods (identified by name and number) that are used to answer each question are

listed in the second column of the tables, linked to the questions. In turn, the types of measures

associated with each method are listed in the third column, linked to the methods and questions.

Several of the methods and measures occur multiple times because they are useful for answering

more than one question. Several of the questions occur more than once because they may need to

be revisited as a program progresses.

Figure 1-2 links the four phases of the program performance cycle back to the program manager’s

goals and lists information provided by different evaluation methods to help meet those goals.

This figure is incorporated into an icon used in Part 2 to assist the program manager in selecting

the right method for his or her purpose.

⁵ It is recognized that the innovation process is nonlinear, but from the perspective of the program manager, it is convenient to portray the program performance cycle as having linear elements.

Figure 1-1. Basic Logic of R&D Programs and Evaluation Questions

[Figure: Performance Assessment Questions Span the Performance Spectrum. The Program Performance Cycle is shown in four phases: (1) design/revise, plan, select, fund, manage R&D; (2) R&D progresses, processes reviewed, outputs achieved; (3) outputs disseminated, interim outcomes achieved; (4) industry commercialization, knowledge spillovers, system capacities, market acceptance of technology, benefits. Short-hand questions shown across the phases include: Relevance? Timeliness? Quality? Alignment? Risk? Cost? Progress? Details of progress? Vs. targets? Users? Partners? Participants? Relationships? Processes? Other outputs? Program productivity? Spillover indicators? Influencing factors? Past cost? Past benefits? Expected benefits? Further commercial progress? Realized benefits and costs? Attributed program effects? Links from noteworthy innovations to R&D? Spillover effects? Was it worth doing? See Tables 1-3 – 1-6 for detailed questions.]

[Source: Gretchen Jordan, SNL]

Figure 1-2. Program Manager Goals, Phases of Program Performance, and Evaluation Information Provided by Evaluation Methods.⁶

Program Manager Goals:

• Improve Program

• Communicate why the program is worth doing

Four Phases of Program Performance Cycle:

1. Design/revise, plan, select, budget

2. Make R&D progress, review processes, achieve outputs

3. Disseminate outputs, achieve interim outcomes

4. Commercialization, market acceptance, energy savings, energy security, other outcomes and impacts

Information Provided by Evaluation Methods (a):

• Planning information (b)

• Indicators of interim progress

• Analysis of collaborative and other relationships

• Creation and dissemination of knowledge outputs

• Energy savings, economic, environmental, energy security, option and other benefits, and benefit-cost measures (c)

• Spillover effects

• Comparative standing (d)

• Overview – was it worth it? (e)

1.7 Additional Considerations in Evaluation

Beyond identifying the questions to be addressed and the evaluation method(s) to be used, there

are additional considerations in undertaking evaluation studies. Important among these are the

level of effort to be employed; the design requirements of the study; whether the focus is an

individual project, a program, a portfolio of projects or programs, a system, or an organization;

whether the evaluation is to be performed retrospectively or prospectively; and identifying the

audiences for evaluation results. Each of these considerations is discussed briefly in turn.

⁶ Some items in the framework – labeled in alphabetical order – require clarification. The clarifications are as

follows: (a) The types of information listed are broad categories to which a variety of methods typically can

contribute. For example, the survey method has been used to contribute to most, if not all, the informational

categories shown, as has the case study method. Similarly, both methods have been used in all or most phases of the

program performance cycle. However, when the figure is used as an icon in Part II, the purpose is to highlight for

each method the principal type(s) of information it generates and the principal phases in which it is used; (b)

“Planning information” as used here encompasses a wide range of different types of information, including increased

understanding of program dynamics and transformational processes, assessment of technical risks, budget analysis,

estimates of user needs and satisfaction, and other information that bears on the operational design of a program; (c)

“Benefit-cost measures” encompass net present value measures, benefit-to-cost ratio measures, and rate of return

measures, including private returns, social returns, and returns attributed to the public investment; (d) “Comparative

standing” refers to how a program compares with other programs in terms of selected dimensions, for example, the

size and growth rate of their research budgets, the educational attainments of their employees, their R&D outputs, and

their productivity in generating outputs; (e) Overview judgments of a program’s worth generally draw on a larger

body of information compiled through the use of a variety of evaluation methods.
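
As an illustration of the benefit-cost measures named in item (c), the following sketch computes a net present value and a benefit-to-cost ratio. The cash flows, the 7% discount rate, and the function name `present_value` are assumptions made up for the example; they are not figures or methods taken from any program, and a rate-of-return calculation is not shown.

```python
# Hypothetical annual program costs and benefits (year 0 first), in $ millions.
costs = [10.0, 5.0, 0.0, 0.0]
benefits = [0.0, 2.0, 12.0, 15.0]
discount_rate = 0.07  # assumed discount rate, for illustration only


def present_value(flows, rate):
    """Discount a list of yearly amounts back to year 0."""
    return sum(amount / (1.0 + rate) ** year for year, amount in enumerate(flows))


pv_costs = present_value(costs, discount_rate)
pv_benefits = present_value(benefits, discount_rate)

# Net present value: discounted benefits minus discounted costs.
net_present_value = pv_benefits - pv_costs
# Benefit-to-cost ratio: discounted benefits divided by discounted costs.
benefit_cost_ratio = pv_benefits / pv_costs

print(f"NPV: {net_present_value:.2f}  B/C ratio: {benefit_cost_ratio:.2f}")
```

In an actual study, the hard part is usually estimating the benefit stream and the share attributable to the program, not the discounting arithmetic shown here.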

Level of Effort: The amount of time and resources to be put into an evaluation study can vary

depending on the analytical challenges faced, the method(s) used, the complexity of the study

design, the data existing and needing to be compiled, the intended use of the results and related

need for the study to be carefully researched, documented, defensible, and publishable, and, of

course, the program resources available for the study. After a program manager has identified the

question(s) to be answered, the intended use of the study, and the audience for the results, a study

plan can be developed and study costs estimated based on the method(s) to be used and the desired

features of the study.

Study Design: How a study is designed is dependent on the type of question asked. Three

common types of questions are (1) descriptive questions, (2) normative questions, and (3) impact

or cause and effect questions.⁷

Descriptive questions are generally the easiest to address. These are the what, why, who, how,

and how much or how many questions. For example, we may wish to know how many papers

were published from 1995 through 2005 by a program. The answer requires a simple count of

published papers. Suppose we wish to know what connections exist between a particular

government lab, other government labs, universities, and company labs. A network analysis can

show the linkages among these organizations. Suppose we want to know who developed a

technology and why. The descriptive case study method can tell the story of the developers, their

motivations, and critical aspects of the development.
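
The publication-count example above amounts to a filter and a tally. A minimal sketch, assuming a hypothetical list of (title, year) records and a hypothetical helper named `count_published`:

```python
# Hypothetical publication records: (title, year). Real data would come from
# a program's own publication database.
papers = [
    ("Paper A", 1993),
    ("Paper B", 1995),
    ("Paper C", 2001),
    ("Paper D", 2005),
    ("Paper E", 2006),
]


def count_published(records, first_year, last_year):
    """Count records whose year falls within the inclusive range."""
    return sum(1 for _, year in records if first_year <= year <= last_year)


print(count_published(papers, 1995, 2005))  # prints 3
```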

Normative questions are asked when we have a standard, goal or target and we want to know how

actual outcomes compare against the standard or goal. Answering this kind of question is also

relatively straightforward. The way the goal or target is expressed determines the method used to

answer normative questions. For example, a program goal may be to achieve at least an 85%

customer satisfaction rating. Thus, the relevant question is whether the program met its goal of

achieving at least an 85% customer satisfaction rating—a question that can be answered using the

survey method.
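
As a minimal sketch of how such a normative question might be checked, the Python fragment below compares a survey-based satisfaction rate with the 85% goal. The response counts are hypothetical, the function name is invented for illustration, and the normal-approximation confidence interval is an assumption about how sampling uncertainty could be reported; it is not a procedure prescribed by this booklet.

```python
import math

def satisfaction_vs_goal(satisfied, respondents, goal=0.85, z=1.96):
    """Compare an observed satisfaction rate with a stated goal.

    Returns the observed rate, an approximate 95% confidence interval
    (normal approximation), and whether the point estimate meets the goal.
    """
    if respondents <= 0:
        raise ValueError("respondents must be positive")
    rate = satisfied / respondents
    half_width = z * math.sqrt(rate * (1 - rate) / respondents)
    return {
        "rate": rate,
        "ci_low": max(0.0, rate - half_width),
        "ci_high": min(1.0, rate + half_width),
        "meets_goal": rate >= goal,
    }

# Hypothetical survey: 352 of 400 respondents report being satisfied.
result = satisfaction_vs_goal(satisfied=352, respondents=400)
print(result)
```

In this hypothetical case the point estimate exceeds the goal, but the lower bound of the interval falls just below 85%, so a cautious reader might treat the goal as only marginally met.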

Impact questions require more attention to study design, because the evaluation needs to show not

only that an effect can be observed but also that the program in question caused it to happen—

although with R&D it is often feasible to show “contribution” rather than strict causality. For a

non-R&D example of the challenge of showing causality, consider a program that aims to increase

jobs. The program is implemented and employment increases. Was it the program or changes in

the business cycle independent of the program that was responsible for the increase? For an R&D

example, suppose a program seeks to increase fuel efficiency by developing a new type of engine.

[7] The discussion of types of questions and formulation of study design is based on material from an on-line course on evaluation described by Bill Valdez, Bill Eckert, Padma Karunaratne, and Rosalie Ruegg in a presentation at the 2005 annual meeting of the American Evaluation Association, Toronto, Canada, October 2005.


Table 1-3. Phase 1 of Program Performance Cycle: Designing/Revising, Planning, Selecting, and Budgeting

Relevant Questions Methods for Answering

Questions

(No.) gives listing order in

Part 2

Types of Measures Given

What are the relevancy and timeliness of this program or initiative? Would it make

sense to delay it until more fundamental work on enabling technologies is

completed? What are the factors that endanger it?

(2-1) Peer review/Expert

judgment in support of

strategic planning,

selecting, and budgeting

Judgment

Critiques

Recommendations

Who are your partners, and how much are they contributing to the effort?

What technologies (or other outcomes) do you expect to deliver, and when?

How did you come to select the technologies/approaches you are using in pursuit of

the program or initiative?

How do planned projects or activities support planned program or initiative

objectives?

Does the innovativeness (technical risk level) of the planned R&D program meet

acceptable levels?

Why do you think the technology will work?

How much will the program/initiative cost? How did you come to this cost estimate?

What is the likelihood that this amount will be sufficient to achieve the goals?

How much has been spent thus far? Does the progress achieved thus far match

expectations based on those expenditures?

(2-11) Benefit-cost analysis

-- retrospective

Economic, knowledge,

environmental, &

security benefits

What additional benefits are expected from the new program or initiative relative to

its additional costs?

(2-11) Benefit-cost analysis

-- prospective

Economic,

environmental, &

security benefits

Are program mechanisms, processes, and activities appropriate to achieve program or initiative goals? How are resources to be transformed into desired outputs and outcomes? How can the transformational processes be strengthened?

(2-1) Peer review/Expert judgment

Judgment

(2-7) Case study Qualitative explanations

(2-12) Econometric studies Quantitative functional relationships

Why do you think the planned efforts will yield the results you are seeking? What

confidence do you have in our ability to deliver the desired outcome? Why?

(2-1--2-14) All methods Past and predicted

performance results

from multiple studies

(see tables 3-5)


Table 1-4. Phase 2 of Program Performance Cycle: Making R&D Progress, Reviewing Process Mechanisms and Achieving Outputs

Relevant Questions | Methods for Answering Questions ((No.) gives listing order in Part 2) | Types of Measures Given
Are we making technical progress as planned? | (2-2) Monitoring: comparing progress against technical milestones | Comparison of technical achievements against targets
Is the program’s research of high scientific quality? Is it relevant, productive, and well managed? | (2-1) Peer review/Expert judgment | Judgment
Who is participating? In what roles? What relationships are developing? Is the program strengthening the research network? | (2-6) Network analysis (before-and-after applications are recommended) | Diagram showing connections among research entities
How are program mechanisms, processes, and/or activities working? How can they be strengthened? | (2-2) Monitoring activities | Indicators
 | (2-7) Case study -- descriptive/exploratory | Qualitative explanations
 | (2-12) Econometric studies | Quantitative functional relationships
What are the program’s codified knowledge outputs? | (2-3) Bibliometrics – counts | Number of papers; Number of patents
What are other outputs of the program? Do they match expectations? | (2-2) Monitoring outputs | Indicators, e.g., Number of research prototypes; Number of processes; Number of algorithms; Number of students trained. Comparisons of achieved outputs against targets
How does the program’s output productivity compare with similar programs? | (2-9) Benchmarking | Comparison of units of outputs per resource input among programs


Table 1-5. Phase 3 of Program Performance Cycle: Output Dissemination and Achievement of Interim Outcomes

Relevant Questions | Methods for Answering Questions ((No.) gives listing order in Part 2) | Types of Measures Given
Who is using the program’s knowledge outputs? To what extent? | (2-3) Bibliometrics – citation analysis | Citations of publications; Patent citation trees
How noteworthy are the resulting patents? What are the hot trends? Are there important regional impacts? | (2-5) Hot-spot patent analysis | Relative frequency of citations
What role did the program play in initiating research in this area? | (2-4) Bibliometrics – data mining | Growth in use of keywords in documents over time & program’s contribution
What additional project-related relationships have developed among researchers? Among others, such as commercializers and users? | (2-6) Network analysis (before-and-after applications are recommended) | Diagram showing connections among related entities
To what extent have the program’s outputs been commercialized? | (2-2) Indicators; (2-10) Technology commercialization tracking | Number of outputs commercialized; Stage of commercialization; Extent of commercialization
What factors are influencing industry’s adoption/lack of adoption of the program’s technologies? | (2-7) Case study -- descriptive/explanatory | Narrative and data; List of factors
How long is it taking to first sales? How much is being realized in annual revenue? What are related employment effects? | (2-8) Survey | Statistics
What are the realized benefits and costs of the technology to date? What share of net benefits from the technology are attributed to the program? | (2-11) Benefit-cost analysis | Net present value benefits with and without the program; Rate of return
What evidence is there of spillovers from the R&D? | (2-14) Spillover analysis | Indicators of spillovers
How is the program working thus far? | (2-7) Case study – descriptive/explanatory | Narrative and data


Table 1-6. Phase 4 of Program Performance Cycle and Beyond: Commercialization, Market Acceptance, Outcomes and Impacts

Relevant Questions | Methods for Answering Questions ((No.) gives listing order in Part 2) | Types of Measures Given
To what extent has commercialization been achieved? | (2-10) Technology commercialization tracking; (2-8) Survey | Stage of commercialization and extent of commercialization; Statistics on commercial achievements
What are the realized benefits and costs of the program or initiative? | (2-11) Benefit-cost analysis -- retrospective | Economic, knowledge, environmental, & security benefits
What effect has the program or initiative had on residential energy efficiency? On commercial energy efficiency? | (2-8) Survey; (2-12) Econometric method | Correlation results; Production functions
Are there one or more noteworthy innovations that can be shown to link back directly to the program’s research? | (2-13) Historical tracing (including citation analysis) | Documented path linking downstream innovation to upstream R&D
Is there evidence that knowledge spillovers (use of research results beyond planned uses) have occurred? | (2-3) Bibliometrics – citation analysis; (2-6) Network analysis | Citations of publications; Patent citation trees; Diagram showing connections among research entities
What are the spillover effects for consumers and producers in the target industry and in other industries from the program’s or initiative’s technologies and knowledge outputs? | (2-14) Spillover analysis | Consumer surplus; Producer surplus; Knowledge spillovers; Network spillovers
How does the program compare with counterpart programs? | (2-9) Benchmarking | Comparisons among programs on selected parameters
If we had it to do all over again, would we have launched the program or initiative? | (2-1) Peer review/expert judgment supported by multiple retrospective evaluation methods (2-3--2-14) | Comparison of retrospective evaluation results against original program/initiative expectations


Then fuel efficiency increases. Was it the government research program that caused the efficiency

improvement, or was it something else, such as private-sector R&D?

To establish cause-and-effect conditions, an evaluation study needs, first, a logical theory that

explains why a causal relationship makes sense. Second, it needs the cause and the effect to

follow a logical time order, such that the program precedes the observed outcome. Third, it needs

to ensure that the condition of co-variation is met, i.e., the outcome has the ability to change as the

program’s intervention is applied. And, fourth and most difficult, the evaluation needs to

eliminate rival explanations for the observed changes.

Approaches to help establish causality include before and after comparisons; use of control groups

with random assignment; application of statistical/econometric techniques to eliminate rival

explanations when comparison groups do not include random assignment; and use of

counterfactual questions of participants to try to assess what would have happened if the program

had not existed.
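
One way to combine a before-and-after comparison with a comparison group is a difference-in-differences calculation. The sketch below is illustrative only: the numbers are invented, the function name is hypothetical, and the estimate is credible only if the two groups would have followed similar trends without the program, an assumption that must be argued separately.

```python
def difference_in_differences(treated_before, treated_after,
                              comparison_before, comparison_after):
    """Estimate a program effect as the change in the participant group
    minus the change in the comparison group over the same period."""
    treated_change = treated_after - treated_before
    comparison_change = comparison_after - comparison_before
    return treated_change - comparison_change

# Hypothetical average fuel efficiency (miles per gallon).
effect = difference_in_differences(
    treated_before=24.0, treated_after=29.0,        # firms using program results
    comparison_before=24.5, comparison_after=26.5,  # similar firms that did not
)
print(f"Estimated contribution of the program: {effect:.1f} mpg")
```

Subtracting the comparison group's change removes influences common to both groups, such as fuel prices or general technical progress, which is one way of addressing rival explanations.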

Study Focus on Project, Program, or Beyond: It should be noted that with the exception of

benchmarking, the methods presented are intended for use within the scope of a given program to

evaluate individual projects, related collections (or portfolios) of projects, or, in some cases, a

program as a whole. At this time the state of the art of evaluating collections of research

portfolios across multiple programs and organizations is limited and under development.

Retrospective versus Prospective Evaluation: Retrospective evaluation takes a look back at

past accomplishments. It is based on empirical data. Prospective evaluation projects what is

expected to happen in the future. Prospective evaluation is performed to forecast results of a

decision too recent to have generated empirical data. Prospective evaluation is characterized by

more uncertainty than retrospective evaluation—uncertainty about the technical outcome of a

project or program, uncertainty about market acceptance of the technical outcome, and uncertainty

about future “states of the world” that may affect demand and supply conditions. Hence, the

results of prospective evaluation tend to be more uncertain than the results of retrospective

evaluation.

Communicating Evaluation Results to Different Audiences: The program manager will be a

prime audience for results of an evaluation study, but there are other “stakeholders” who also may

be interested in evaluation results. To reach other stakeholders, to address their specific needs,

and to communicate to them the relevant findings, the R&D program staff can help develop an

“evaluation results” communications plan.

References

Luke Georghiou, “What Lies Beneath…Avoiding the Risk of Undervaluation,” presentation at the conference New Frontiers in Evaluation, Vienna, April 24-25, 2006, Fteval.

(Available online at www.fteval.at/papers06 by selecting “plenary sessions” and number 3.)


McLaughlin, John A., and Jordan, Gretchen B., “Chapter 1: Logic Models,” in Handbook of

Practical Program Evaluation, 2nd Edition, Wholey, J., Hatry, H., and Newcomer, K., Eds.,

Jossey-Bass, 2004.

Rosalie Ruegg, edited, “Benchmarking Evaluation of Public Science and Technology Programs in

the United States, Canada, Israel, and Finland,” Proceedings of a Workshop, TEKES--National

Technology Agency of Finland, Embassy of Finland, Washington, DC, September 25, 2002.

Rosalie Ruegg and Irwin Feller, A Toolkit for Evaluating Public R&D Investment, NIST GCR 03-

857 (Gaithersburg, MD: National Institute of Standards and Technology, July 2003), “Part I,

Evaluation Framework,” pp. 13-53.

U.S. Office of Management and Budget, “What Constitutes Strong Evidence of a Program’s

Effectiveness?” Supporting Materials/References for Program Assessment Rating Tool (PART

Guidance, 2004), Executive Office of the President, accessed January 2006 at

www.whitehouse.gov/omb/part/2004_program_eval.pdf.


Part II. Overview of Selected Research Evaluation Methods

Each of fourteen evaluation methods is described in sections that comprise Part 2, the heart of the

booklet. The treatment of each includes:

• a definition of the method and what it has to offer the program manager;
• an overview of how the method is organized, conducted, and analyzed;
• limitations of the method;
• practical uses of the method; and
• examples.

The examples are of successful applications of the R&D evaluation methods taken from

evaluation reports by organizations such as DOE’s EERE, DOE’s Office of Science, the National

Science Foundation, the National Institute of Standards and Technology, and the National

Research Council. Note that each example is a brief synopsis taken from a study—in many cases

a quite lengthy and detailed study. In order to adhere to the booklet’s aim of providing a quick

reference and overview, many details of the source studies are omitted. However, references are

provided at the end of the presentation of each method for those who wish to delve further into the

examples. Many of the full reports from which the examples are drawn are available on-line for

easy access.

The methods are presented in the order listed. Their numbers (in parentheses) refer to the sections

that follow, and they are also keyed to the series of questions presented in Tables 1-3 through 1-6.

As indicated in the tables, most of the methods are used to answer questions in more than one

phase of the cycle. An icon (based on Figure 1-2) at the top of each section alerts the program

manager to the phase or phases of the program performance cycle in which the method will likely

be most useful and highlights the type of information it will provide.

(2-1) Peer Review/Expert Judgment

(2-2) Monitoring, Data Compilation, and Use of Indicators

(2-3) Bibliometrics – counts and citation analysis

(2-4) Bibliometrics – data mining

(2-5) Bibliometrics – hotspot patent analysis

(2-6) Network Analysis

(2-7) Case Study Method – Exploratory, Descriptive, and Explanatory

(2-8) Survey Method

(2-9) Benchmarking Method

(2-10) Technology Commercialization Tracking Method

(2-11) Benefit-Cost Case Study

(2-12) Econometric Methods

(2-13) Historical Tracing

(2-14) Spillover Analysis

Again, it should be kept in mind that the field of R&D evaluation is still developing. Additional

methods and techniques may be added to this booklet as they are developed, tested, and found

useful to R&D managers.


2.1 Peer Review/Expert Judgment

Program Manager Goals:

• Improve Program

• Communicate why the program is worth doing

Four Phases of Program Performance Cycle:

1. Design/revise, plan, select, budget

2. Make R&D progress, review processes, achieve outputs

3. Disseminate outputs, achieve interim outcomes

4. Commercialization, market acceptance, energy savings,

energy security, other outcomes and impacts

Information Provided by Evaluation Methods:

• Planning information

• Indicators of interim progress

• Analysis of collaborative and other relationships

• Creation and dissemination of knowledge outputs

• Energy savings, economic, environmental, energy security, option and other

benefits, and benefit-cost measures

• Spillover effects

• Comparative standing

• Overview – was it worth it?

[Goals, phases, and information provided by this method are highlighted]

Peer review/expert judgment is a relatively low-cost, fast-to-apply, well-known,

widely accepted, and versatile evaluation method that can be used to answer a

variety of questions throughout the program performance cycle, as well as in

other applications. It is used, for example, for support of strategic planning

decisions, selecting among projects and programs, for in-progress project and

program review, for process assessment, for stage-gate decisions, for merit

review of papers for publications, and for making judgments about diverse

topics, including—when supported by results from application of other

methods—the overall success of a program. It is widely used by industry,

government, and academia. In practice, it ranges from a formal process

conducted according to strict protocol to an informal process.

Definition: Peer Review/Expert Judgment is qualitative review, opinion, and advice from experts

on the subject being evaluated, based on objective criteria. The method combines program

performance information (provided to the experts) with the many years of cumulative experience

of the subject-matter experts, and focuses that informed expertise and experience on addressing

key questions about a program, initiative, project, proposal, paper, topic, or other subject of focus.

While information from other sources, including other methods of evaluation, may provide

influential evidence, the ultimate conclusions about performance are based on the judgment of the

experts.


EERE’s Peer Review Guide (2004) defines in-progress peer review as:

A rigorous, formal, and documented evaluation process using objective criteria

and qualified and independent reviewers to make a judgment of the technical/

scientific/business merit, the actual or anticipated results, and the productivity and

management effectiveness of programs and/or projects.

How DOE’s EERE in-progress peer reviews are organized, conducted, and analyzed:

The EERE Peer Review Guide sets out minimum requirements for planning, conducting,

and responding to peer reviews. A primary requirement is that the reviews be independent

both in fact and in terms of public perception. This is achieved through having processes

that are transparent and having third parties involved in the selection of reviewers.

To a large extent, the quality of the results depends upon the choice of qualified and independent

reviewers. In addition to being experts in the subject matter, reviewers should have no real or

perceived conflict of interest. Their judgments should be guided by the objective evaluation

criteria, established prior to the review, and should address the specific questions established for

the review.

When used to review an individual project or a collection of projects, peer review

generally focuses on the question “are we doing it right?” A program-level review will focus on

the broader issue of “is the program doing the right thing?”

Limitations: The quality and credibility of peer/expert evaluation is highly dependent on the

reviewers/experts selected and the evaluation questions and criteria used by those reviewers.

Reviewers must be very knowledgeable about the subject and free of conflicts of interest that

could bias their judgment. The sometimes-expressed view that peer review is an “old boys club”

must be avoided. Steps may be needed to calibrate reviewer ratings. Defining appropriate criteria

may be problematic when the work being reviewed is highly innovative. Peer review panels are

dependent on sound and detailed information on which to base their judgments about a program’s

progress or impact, and they are vulnerable to poor and insufficient information. The type of data

needed for retrospective impact assessment cannot be created in an expert review panel format.

For this reason, peer review tends not to be appropriate for evaluating impacts of programs --

except if a peer review panel is provided substantial, reliable results from impact studies based on

other methods, and serves the function of integrating results across multiple studies.

Uses:

• To conduct in-progress reviews of scientific quality and productivity.
• To help answer questions about the relevancy, timeliness, riskiness and management of existing program research activities, and resource sufficiency of new program initiatives.
• To score and rate projects under review to aid decisions to continue, discontinue, or modify existing or planned projects, programs, or program initiatives.
• To help assess appropriateness of program mechanisms, processes, and activities and how they might be strengthened.
• To integrate across multiple evaluation results and render judgments about the overall success of a program or program initiative.
• To provide information to help program managers make decisions to design or revise their program, re-direct existing R&D funds, or allocate new funds.

Examples: Two examples are given. The first illustrates DOE’s formal use of peer review for in-progress review of projects and programs. The second illustrates a less formal, less rigorous use of

experts convened as a working group and supported by the results of previously completed studies

and specially commissioned papers, to review and discuss several research questions. It is

provided to suggest the wide range of practice in using “peers” or “experts” for evaluation.

Example 1: Using in-progress peer review to assess the performance of projects in DOE

Hydrogen Program

In the EERE Hydrogen, Fuel Cells, and Infrastructure Technologies Program (HFCIT), research

and other activities performed by industry, universities, and national laboratories are evaluated

annually at the Hydrogen Program Merit Review and Peer Evaluation meeting. Independent

expert panels review the project portfolio in accordance with criteria, which helps guide the

program’s Technology Development Managers in making funding decisions for the new fiscal

year. This review of the HFCIT program is conducted using the process outlined in the EERE

Peer Review Guide. In addition to annual peer review at the project portfolio level, external

reviews are conducted every two or three years by the National Academies (e.g. National Research

Council, National Academy of Sciences), or an equivalent independent group.[8] The program prepares a formal response to the review recommendations.

Table 2-1 illustrates how peer review results were used by the Hydrogen Program to help inform

decisions on whether to continue or discontinue research projects.[9] Table 2-1 shows a sample

subset of a larger collection of summary results for HFCIT program technical areas in 2003.

Many research projects determined to have very low peer review ratings, as established from a

comparable peer review process applied to all projects in a given subprogram, were discontinued.

A summary of scoring results and Program decisions follows the table.

[8] See, for example, The Hydrogen Economy: Opportunities, Costs, Barriers, and R&D Needs, prepared by the National Research Council (NRC) and National Academy of Engineering, February 2004.

[9] FY2003 Hydrogen Program Merit Review & Peer Evaluation Report.


Table 2-1. Results Summary Table from 2003 HFCIT Program Peer Review Report

Project No. | Project, Performing Organization | Avg. Score | Continued / Discontinued / Completed | Summary Content
10 | Low Cost H2 Production Platform, Praxair | 2.95 | V | Emphasize collaboration.
11 | Defect-free Thin Film Membranes for H2 Separation & Isolation, SNL | 2.87 | V |
12 | Maximizing Photosynthetic Efficiencies and H2 Production in Microalgal Cultures, UC Berkeley | 3.33 | V | Focus on program RD&D goals for 2005.
13 | Reformer Model Development for Hydrogen Production, JPL | 2.27 | V | Model analysis in this area is no longer a program requirement.
14 | Photoelectrochemical H2 Production, University of Hawaii | 3.30 | V | Emphasize further development of multi-junction photoelectrodes to meet program RD&D goals for 2005.
15 | Photoelectrochemical Water Splitting, NREL | 3.23 | V | Focus on candidate lighting materials.
16 | Encapsulated Metal Hydride for H2 Separation, SRTC | 2.83 | V |
17 | Economic Comparison of Renewable Sources for Vehicular Hydrogen in 2040, DTI | 2.90 | V |
18 | Biomass-Derived H2 from a Thermally Ballasted Gasifier, Iowa State University | 2.70 | V |
20 | Evaluation of Protected Metal Hydride Slurries in a H2 Mini-Grid, TIAX | 3.20 | V |
22 | Novel Compression and Fueling Apparatus to Meet Hydrogen Vehicle Range Requirements, Air Products & Chemicals Inc. | 3.20 | V |
30 | Techno-Economic Analysis of H2 Production by Gasification of Biomass, GTI | 2.60 | V | Project completed.
31 | Supercritical Water Partial Oxidation, GA | 2.57 | V | Unlikely that cost barrier can be overcome.
32 | Development of Efficient and Robust Algal Hydrogen Production Systems, ORNL | 3.47 | V | Focus on designing new DNA sequence coding for proton channel.
34 | Water-Gas Shift Membrane Reactor Studies, University of Pittsburgh | 2.90 | V | Emphasize feasibility of hi-temp water-gas shift under realistic operating conditions.
38 | Low Cost, High Efficiency Reversible FC Systems, Technology Management Inc. | 2.80 | V | High electrical input requirement prevents overcoming energy efficiency barrier.
39 | High-Efficiency Steam Electrolyzer, LLNL | 2.37 | V | Carbon deposition at anode is a recurring problem.

Source: FY2003 Hydrogen Program Merit Review & Peer Evaluation Report


Peer review scoring results for hydrogen research projects:

• In 2003 there were 56 total hydrogen projects that received a review rating.

• Distribution of scores ranged from 2.2 to 3.68 on a 4-point scale (1.0 to 4.0).

• 8 projects were judged to be “completed.”

• 7 projects were discontinued.

• 6 of 7 discontinued projects were at or lower than the 2.8 rating threshold. They were

discontinued for the following stated reasons:

o Model analysis in this area is no longer a program requirement.

o Project funding was terminated due to poor review.

o Carbon deposition at anode is a recurring technical problem.

o It is unlikely that the cost barrier can be overcome.

o Project funding was terminated pending further review of approach.

• A seventh project had a score of 3.23 but was discontinued for the following reason:

o High electrical input requirement prevents overcoming barrier.
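
The relationship between scores and decisions can be explored with a simple screen. The sketch below uses a handful of average scores transcribed from Table 2-1 and the 2.8 rating threshold cited in the summary; the function name is hypothetical. The screen only flags projects for closer scrutiny. As the summary indicates, actual decisions also reflected stated technical and programmatic reasons, so this is not a reconstruction of the program's decision rule.

```python
THRESHOLD = 2.8  # rating threshold cited in the scoring summary

# Average peer review scores for a few projects, transcribed from Table 2-1.
scores = {10: 2.95, 13: 2.27, 31: 2.57, 32: 3.47, 38: 2.80, 39: 2.37}

def flag_for_scrutiny(scores, threshold=THRESHOLD):
    """Return project numbers whose average score is at or below the
    threshold, ordered from lowest score to highest."""
    flagged = [project for project, score in scores.items() if score <= threshold]
    return sorted(flagged, key=lambda project: scores[project])

print(flag_for_scrutiny(scores))
```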

Peer review scoring results for fuel cell research projects:

• In 2003 there were 73 total fuel cell projects that received a review rating.

• Distribution of scores ranged from 1.8 to 3.9 on a 4-point scale (1.0 to 4.0).

• 15 projects were judged to be “completed” or “concluding.”

• 5 projects were discontinued.

• 4 of 5 discontinued projects were at or lower than the 2.8 rating threshold. They were

discontinued for the following stated reasons:

o Project was terminated since other approaches to fuel cell humidification appear to

be more effective.

o Project was halted pending go/no-go decision.

o Project funding was terminated in favor of higher priority R&D.

o Project was terminated since technology is unable to meet technical targets.

• A fifth project had a score of 3.04, but a decision was made to set project priorities and focus

future continued work only on a critical element of the research.

Example 2: Using expert judgment informed by supporting studies and papers to examine issues

surrounding public support of technology development

Researchers at Harvard University’s Kennedy School of Government in collaboration with MIT’s

Sloan School of Management and the Harvard Business School used elements of expert review in

conducting a study of barriers to private-sector funding of early-stage, high-risk technology

development projects.[10] This study lacked the formality and rigor of the previous example in

running a peer review process; it did, however, rely on a group of experienced practitioners from

business, finance, and government, together with academic experts, convened in two workshops to

discuss commissioned papers, hear presentations, discuss issues surrounding the management of

technical risks and related funding decisions, comment on the results of supporting studies, and

explore answers to the following questions:

• How do industrial managers make decisions on funding early-stage, high-risk technology projects?
• What external factors, especially those controlled or influenced by government, can sufficiently reduce the risk factor of projects that appear otherwise to be attractive commercial opportunities for the firm, so that firms will invest in them and seek their commercialization?
• How can a government program better identify projects that would not be pursued or would be pursued less vigorously without public support and at the same time are likely to lead to commercial success, with broad public benefits, with that support?

[10] Branscomb et al., 2000.

In attempting to address these questions, the study concluded that there is a serious and widening

gap in sources of support for research projects that fall between concept development and the

research needed to reduce a technology to practice.

References

L. Branscomb, K. Morse, and M. Roberts, Managing Technical Risk: Understanding Private

Sector Decision Making on Early Stage Technology-Based Projects, NIST GCR 00-787

(Gaithersburg, MD, April 2000.)

National Research Council (NRC), The Hydrogen Economy: Opportunities, Costs, Barriers, and

R&D Needs, prepared by the National Research Council (NRC) and National Academy of

Engineering, February 2004.

U.S. DOE EERE, EERE Peer Review Guide: Based on a Survey of Best Practices for In-Progress

Peer Review, August, 2004.

U.S. DOE EERE, FY2003 Hydrogen Program Merit Review & Peer Evaluation Report, 2003.


2.2 Monitoring, Data Compilation, and Use of “Indicators”

Program Manager Goals:

• Improve Program

• Communicate why the program is worth doing

Four Phases of Program Performance Cycle:

1. Design/revise, plan, select, budget

2. Make R&D progress, review processes, achieve outputs

3. Disseminate outputs, achieve interim outcomes

4. Commercialization, market acceptance, energy savings,

energy security, other outcomes and impacts

Information Provided by Evaluation Methods:

• Planning information

• Indicators of interim progress

• Analysis of collaborative and other relationships

• Creation and dissemination of knowledge outputs

• Energy savings, economic, environmental, energy security, option and other

benefits, and benefit-cost measures

• Spillover effects

• Comparative standing

• Overview – was it worth it?

[Goals, phases, and information provided by this method are highlighted]

Monitoring a program as it is carried out, collecting resulting data, and

generating selected indicator metrics from the data are integral to evaluation.

Pairing monitoring with evaluation is considered good practice. Continuous

monitoring and data collection support evaluation and provide useful interim

indicators of change in key program functions that can guide program

managers in making mid-course corrections.

Definition: Monitoring is a continuous assessment of key program functions organized internally

by program management and carried out on an on-going basis. Monitoring entails setting up a

data collection system for compiling key data on program activities, participants, interim

achievements and outputs. The resulting data can be used to develop interim performance metrics

or “indicators” of program progress, outputs, and outcomes, and are helpful in keeping a program

on track and for guiding mid-course corrections. The data also contribute to evaluation studies.

How monitoring and data collection are organized and conducted: Developing a monitoring

system with data collection and construction of indicators starts with review of the program’s

detailed logic model. From the logic model, it is possible to identify key activities, expected

program participants, expected outputs, and, perhaps, some expected outcomes that are conducive

to monitoring, such as number of technologies under commercialization. A closer look at projects

or research activities that comprise a program or initiative reveals the technical goals, against

which progress can be tracked. After deciding what to monitor, the next step is to establish the


supporting data collection strategies, databases, and information technology framework. Program

management must then identify which indicator metrics will best provide interim

guidance. Graphical depictions of the selected indicators often help reveal trends in

key program functions and guide mid-course corrections. When evaluation studies are

launched, the data collected through program monitoring tend to be invaluable. For example,

records of publication and patent outputs are needed to support citation studies. Records of

program participants are a starting point for network studies. Records of funded projects are a

starting point for carrying out case studies. Records of commercial progress are helpful in

organizing economic studies.
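The step from monitoring records to interim indicators can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record fields (`publications`, `patents`, `milestones_planned`, `milestones_met`), the function name `yearly_indicators`, and the sample values are all hypothetical and are not taken from any DOE system.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ProjectRecord:
    """One monitoring record per project and year (all field names are hypothetical)."""
    project_id: str
    fiscal_year: int
    publications: int
    patents: int
    milestones_planned: int
    milestones_met: int


def yearly_indicators(records):
    """Aggregate project-level records into program-level indicators per fiscal year."""
    totals = {}
    for r in records:
        year_totals = totals.setdefault(r.fiscal_year, Counter())
        year_totals["publications"] += r.publications
        year_totals["patents"] += r.patents
        year_totals["milestones_planned"] += r.milestones_planned
        year_totals["milestones_met"] += r.milestones_met

    result = {}
    for fiscal_year, t in sorted(totals.items()):
        planned = t["milestones_planned"]
        result[fiscal_year] = {
            "publications": t["publications"],
            "patents": t["patents"],
            # Share of planned milestones met; None when nothing was planned.
            "milestone_completion": t["milestones_met"] / planned if planned else None,
        }
    return result


# Made-up sample records, for illustration only.
records = [
    ProjectRecord("A", 2005, publications=3, patents=1, milestones_planned=4, milestones_met=3),
    ProjectRecord("B", 2005, publications=1, patents=0, milestones_planned=4, milestones_met=4),
    ProjectRecord("A", 2006, publications=2, patents=2, milestones_planned=5, milestones_met=4),
]
indicators = yearly_indicators(records)
for fiscal_year, values in indicators.items():
    print(fiscal_year, values)
```

Keeping the raw project-level records, rather than only the aggregated indicators, is what lets the same data later feed citation, network, case, and economic studies.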

Limitations: The success of monitoring depends on appropriate selection of what is monitored.

Moreover, interim indicators of progress are just that; they are not measures of ultimate, achieved

outcomes and impacts. A further complicating factor is that a program often has multiple goals

and it may be difficult to know how multiple indicators inform the multiple goals.

Uses:

To track interim program progress.

To guide mid-course corrections; provide information to help program managers make

decisions to design or revise their program, re-direct existing R&D funds, or allocate new

funds.

To support evaluation studies.

Example: Two examples are given. The first illustrates DOE and EERE performance

monitoring systems that serve as reporting and analysis tools to support EERE R&D planning and

management. The second illustrates a monitoring system used by the National Institutes of Health

(NIH) called the Program Performance Monitoring System (PPMS).

Example 1: EERE CPS, DOE Joule and EERE EIS performance monitoring systems

DOE and EERE have three performance monitoring systems that cover parts of the program

performance spectrum: the EERE Corporate Planning System (CPS), the DOE Joule

Performance Measurement Tracking System, and the EERE Executive Information System (EIS).

The CPS is a reporting system that collects and tracks information about milestone achievements

as well as financial performance (e.g., cost and obligation data). This information is collected and

reported at the project and contract levels. The CPS provides quarterly detailed tracking of

achievement of project level goals, which are primarily output measures of research/technology

development accomplishments.

Joule is the name of a program performance tracking system that DOE uses to track and validate

programs’ performance measures. Joule tracks progress toward program goals and important

accomplishments that are stated as official program R&D targets in the reports to Congress and

the OMB. Joule performance measures for R&D (and non-R&D activities, as well) are

incorporated in the annual Congressional budget justifications because doing so encourages

budget and performance integration (required by the Presidential Management Initiative, PMI).


Joule performance measures tend to be annual output measures and sometimes include interim

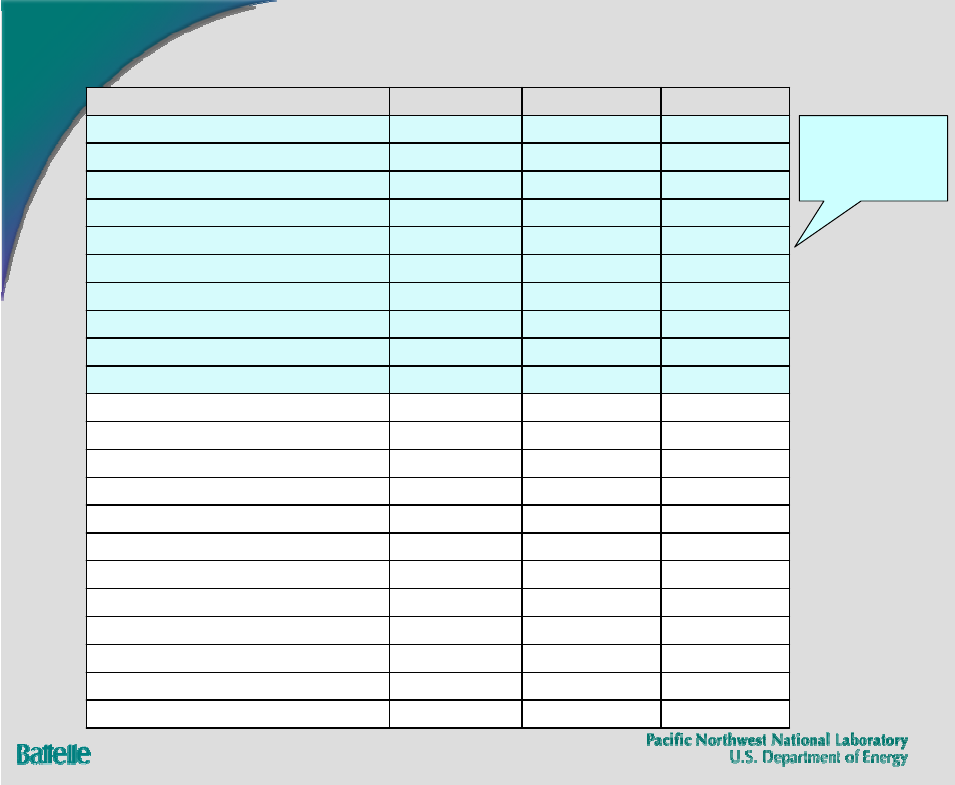

outcomes. The measures are defined at the project or project portfolio levels. Table 2-1 provides

an example of annual Joule performance targets reported at the project portfolio level for

Photovoltaic R&D in the EERE Solar Energy Program.

Table 2-1. Joule Performance Measures for Solar Photovoltaic Energy Systems Research

Photovoltaic Energy Systems

FY 2002 Results:

• Reduce the manufacturing cost of PV modules to $2.25 per Watt (equivalent to a range of $0.20 to $0.25 per kWh price of electricity for an installed solar system). [MET]

FY 2003 Results:

• Reduce manufacturing cost of PV modules to $2.10 per Watt (equivalent to a range of $0.19 to $0.24 per kWh price of electricity for an installed solar system). [MET]

FY 2004 Results:

• Verify, with standard laboratory measurements, U.S.-made commercial production crystalline silicon PV modules with 12.5 percent conversion efficiency.

• Verify, with standard laboratory measurements, U.S.-made commercial production thin-film PV modules with 10 percent conversion efficiency. [MET]

FY 2005 Results:

• Verify, using standard laboratory measurements, a conversion efficiency of 13.5 percent of U.S.-made, commercial crystalline silicon PV modules. Production cost of such modules is expected to be $1.95 per Watt. [MET]

• Develop thin-film PV modules with an 11.0-percent conversion efficiency that are capable of commercial production in the U.S. [MET]

FY 2006 Targets:

• Verify, using standard laboratory measurements, a conversion efficiency of 13.8 percent of U.S.-made, commercial crystalline silicon PV modules. Production cost of such modules is expected to be $1.90 per Watt.

• Develop thin-film PV modules with an 11.2-percent conversion efficiency that are capable of commercial production in the U.S.

FY 2007 Targets:

• Verify, using standard laboratory measurements, a conversion efficiency of 14.5 percent of U.S.-made, commercial crystalline silicon PV modules. Production cost of such modules is expected to be $1.80 per Watt.

• Develop thin-film PV modules with an 11.8-percent conversion efficiency that are capable of commercial production in the U.S.
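As an illustration of how such measures can be turned into a trend indicator, the sketch below computes the percent change in the module cost figures stated in Table 2-1 relative to FY 2002. Two caveats apply: the FY 2002 and FY 2003 entries are worded as manufacturing-cost results while the later entries are expected production costs (and FY 2006 and FY 2007 are targets, not results), so treating them as one series is an assumption made here for illustration only. FY 2004 is omitted because its measure states no cost figure. The function name is hypothetical.

```python
# Cost figures in dollars per Watt, as stated in Table 2-1.
# FY 2006 and FY 2007 are targets; FY 2004 has no cost figure and is left out.
cost_per_watt = {2002: 2.25, 2003: 2.10, 2005: 1.95, 2006: 1.90, 2007: 1.80}


def percent_change_from_baseline(series):
    """Return the percent change of each year's value relative to the earliest year."""
    years = sorted(series)
    baseline = series[years[0]]
    return {
        year: round(100.0 * (series[year] - baseline) / baseline, 1)
        for year in years
    }


trend = percent_change_from_baseline(cost_per_watt)
for year, change in trend.items():
    print(f"FY {year}: ${cost_per_watt[year]:.2f}/W ({change:+.1f}% vs. FY 2002)")
```

On these figures, the FY 2007 target corresponds to a 20 percent reduction from the FY 2002 level.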

Each DOE program submits quarterly and annual Joule targets into a centralized database.

Progress toward achieving these targets is monitored throughout the fiscal year by an

independent DOE office.


Joule includes an external auditing mechanism. DOE chooses a set of randomly selected program

targets for auditing, which may include, for example, the review of completed technical

reports to verify that a stated target has been met according to defined criteria. Joule also provides

color ratings to give a quick-look display of its overall assessment results:

green (100 percent of a target or goal is met), yellow (80-99 percent is met), and red (less than

80 percent is met). Programs’ progress against their Joule performance measures is publicly

reported in the annual DOE Performance and Accountability Report.
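The color thresholds above can be expressed as a small classification rule. The sketch below is illustrative only and is not the Joule system's actual logic: the function name `joule_color` is hypothetical, and it assumes that any value from 80 up to, but not including, 100 percent is rated yellow, since the text does not say how fractional values between 99 and 100 are handled.

```python
def joule_color(percent_met: float) -> str:
    """Map the percent of a target met to a color rating (hypothetical helper).

    Assumption: values from 80 up to (but not including) 100 are rated yellow.
    """
    if percent_met < 0:
        raise ValueError("percent_met must be non-negative")
    if percent_met >= 100:
        return "green"
    if percent_met >= 80:
        return "yellow"
    return "red"


# Made-up example values, for illustration only.
for value in (100, 92.5, 80, 79.9):
    print(f"{value:>5}% met -> {joule_color(value)}")
```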

The EIS is a performance reporting and analysis tool. It is a central repository that provides

integrated project and program level information to EERE Senior Management and program staff.

The EIS integrates many separate databases containing performance information. It aligns key

financial, portfolio, schedule and other information. Its design supports analysis, both for

creating quick, ready-made performance reports and for examining performance

trends (at the program level or across the entire EERE portfolio). Figure 2-1 shows a screen shot of

the EIS Dashboard, the entry portal to the EIS system.