A Student’s Guide to Interpreting SPSS Output for Basic Analyses

These slides give examples of SPSS output with notes about interpretation. All analyses were conducted using the Family Exchanges Study, Wave 1 (target dataset)¹ from ICPSR. The slides were originally created for Intro to Statistics students (undergrads) and are meant for teaching purposes only². For more information about the data or variables, please see: http://dx.doi.org/10.3886/ICPSR36360.v2 ³

¹ Fingerman, Karen. Family Exchanges Study Wave 1. ICPSR36360-v2. Ann Arbor, MI: Inter-university Consortium for Political and Social Research [distributor], 2016-04-14. http://doi.org/10.3886/ICPSR36360.v2

² The text used for the course was The Essentials of Statistics: A Tool for Social Research (Healey, 2013).

³ Some variables have been recoded so that higher numbers mean more of what is being measured. In those cases, an “r” is appended to the original variable name.

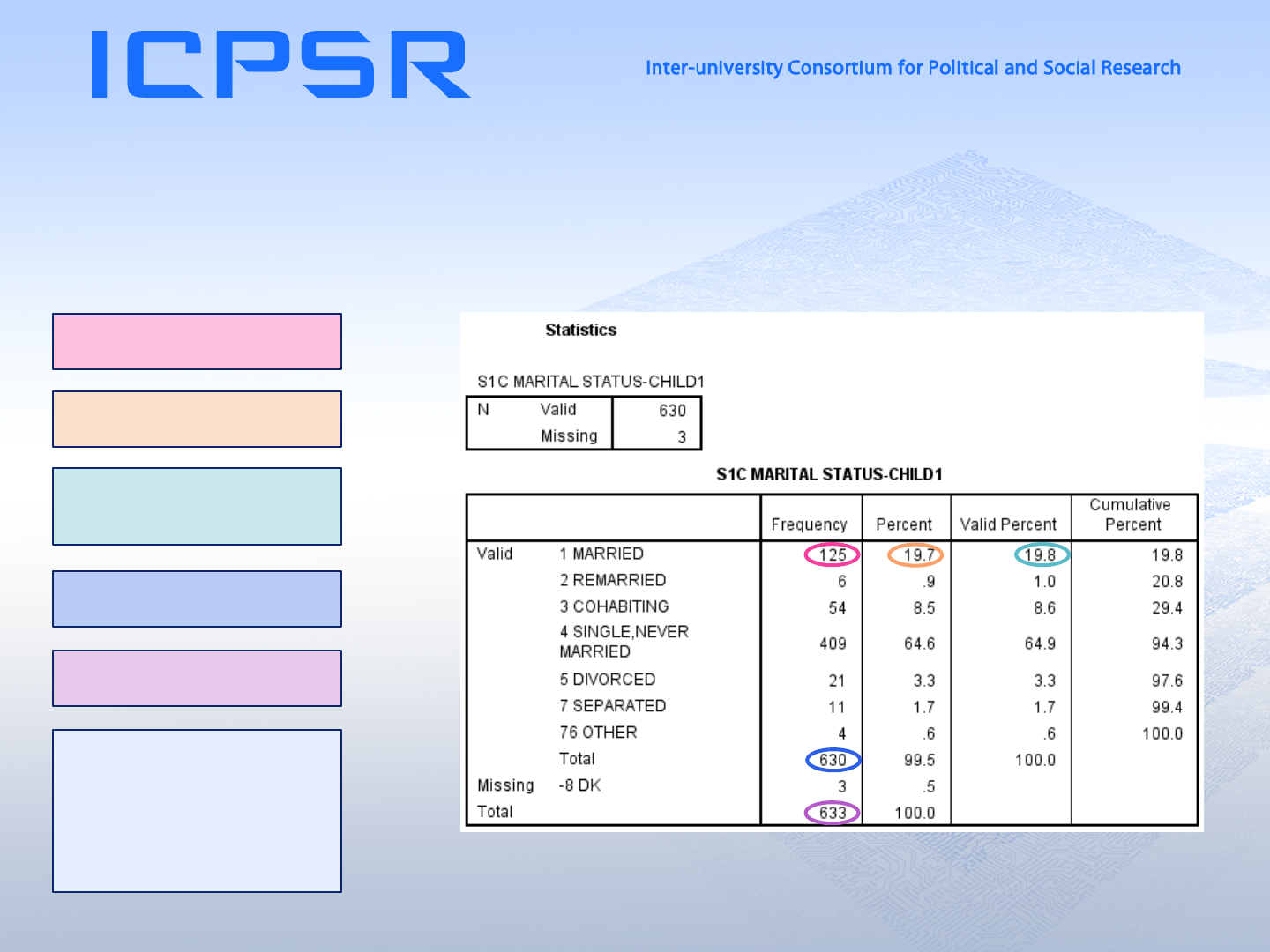

Frequency Distributions

Frequencies show how many people fall into each answer category on a given question (variable) and what percentage of the sample that represents.

Total number of people in the survey sample

Number of people with valid (non-missing) answers to the question

Percent of the total sample who answered that “child1” was married

Number of people who responded that “child1” was married

Percent of those with non-missing data on this question who answered that “child1” was married

Cumulative percent adds the percent of people answering in one category to the total of those in all categories with lower values. It is only meaningful for variables measured at the ordinal or interval/ratio level.
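The three percentage columns can be reproduced by hand. The sketch below uses made-up answers (not the study data) and a hypothetical helper, `frequency_table`; it assumes missing answers are stored as `None` and that cumulative percent is built from the valid percent column.

```python
from collections import Counter

# Hypothetical answers to a marital-status question; None marks a missing answer.
responses = ["married", "single", "married", None, "divorced",
             "married", "single", None, "married", "single"]

def frequency_table(values):
    """Return rows of (category, count, percent, valid percent, cumulative percent)."""
    total_n = len(values)                          # everyone in the sample
    valid = [v for v in values if v is not None]   # non-missing answers only
    valid_n = len(valid)
    counts = Counter(valid)
    rows = []
    cumulative = 0.0
    for category in sorted(counts):                # fixed order for the running total
        count = counts[category]
        percent = 100 * count / total_n            # share of the whole sample
        valid_percent = 100 * count / valid_n      # share of non-missing answers
        cumulative += valid_percent
        rows.append((category, count, percent, valid_percent, cumulative))
    return rows

for row in frequency_table(responses):
    print("{:<10} {:>3} {:>6.1f} {:>6.1f} {:>6.1f}".format(*row))
```

Because marital status is nominal, the cumulative column printed here has no real meaning; it is shown only to make the arithmetic visible.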

Crosstabulation Tables

“Crosstabs” are frequency distributions for two variables together. The counts show how many people in category one of the first variable are also in category one of the second and so on.

Number of people who answered that their children are biologically related to them and that their children need less help than others their age.

Marginal: Total number of people who answered that their children need more help than others their age.

Marginal: Total number of people who answered that all of their children are biologically related to them.

Marginal: Total number of people who had valid data on both D34r and A1A.

Crosstabulation Tables (Column %)

Crosstabs can be examined using either row or column percentages, and the interpretation differs depending on which are used. The rule of thumb is to percentage on your independent variable, that is, to compute percentages within each category of the independent variable.

Marginal: Percent of sample who feel that their children need less help than others their age.

Percent of the sample whose children are all biologically related to them that said their children need less help than others their age.

Marginal: 100% here tells you that you’ve percentaged on columns.

Can you interpret this number? (17.6% of those whose children were not all biologically related to them felt their children needed more help than others their age.)

Crosstabulation Table (Row %)

Percent of those who said their children need more help than others their age whose children are all biologically related to them.

Marginal: Percent of the sample whose children are biologically related to them.

Marginal: 100% here shows that you are using row percentages.

Can you interpret this number? (17.4% of those who said their children need about the same amount of help as others their age had children who were not all biologically related to themselves.)
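To see why row and column percentages answer different questions, here is a small sketch with invented counts (not the study's) and two hypothetical helpers, `row_percents` and `column_percents`. The same cell gives a different percentage depending on which total it is divided by.

```python
# Hypothetical counts (not from the Family Exchanges Study).
# Rows: help children need compared with others their age.
# Columns: are all children biologically related to the respondent?
counts = {
    "less": {"all related": 30, "not all related": 5},
    "same": {"all related": 50, "not all related": 10},
    "more": {"all related": 20, "not all related": 5},
}

def row_percents(table):
    """Percentage within each row, so every row sums to 100."""
    result = {}
    for row_label, cells in table.items():
        row_total = sum(cells.values())
        result[row_label] = {col: 100 * n / row_total for col, n in cells.items()}
    return result

def column_percents(table):
    """Percentage within each column, so every column sums to 100."""
    col_totals = {}
    for cells in table.values():
        for col, n in cells.items():
            col_totals[col] = col_totals.get(col, 0) + n
    return {
        row_label: {col: 100 * n / col_totals[col] for col, n in cells.items()}
        for row_label, cells in table.items()
    }

col_pct = column_percents(counts)
row_pct = row_percents(counts)

# Of those whose children are not all related, what share said "more"?
print(round(col_pct["more"]["not all related"], 1))
# Of those who said "more", what share have children who are not all related?
print(round(row_pct["more"]["not all related"], 1))
```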

Chi Square ($\chi^2$)

Based on crosstabs, $\chi^2$ is used to test the relationship between two nominal or ordinal variables (or one of each). It compares the actual (observed) numbers in a cell to the number we would expect to see if the variables were unrelated in the population.

Actual count ($f_o$).

Count expected ($f_e$) if variables were unrelated in pop. $f_e = \dfrac{\text{row marginal} \times \text{column marginal}}{N}$

$\chi^2$ value (obtained) $= \sum \dfrac{(f_o - f_e)^2}{f_e}$. This could be compared to a critical value (with the degrees of freedom) but the significance here tells you that there appears to be a relationship between the perceived amount of help needed and whether the children are related to the respondent (R).

Degrees of freedom = (# rows - 1)(# columns - 1)

The $\chi^2$ test is sensitive to small expected counts; it is less reliable if $f_e$ is < 5 for multiple cells.
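The chi-square arithmetic can be followed step by step. This sketch uses invented counts and a hypothetical helper, `chi_square`; it computes each expected count from the marginals, sums the squared gaps, and counts cells with an expected count under 5.

```python
# Hypothetical observed counts: 3 rows (less / same / more help needed)
# by 2 columns (all children related / not all related).
observed = [[30, 5],
            [50, 10],
            [20, 5]]

def chi_square(obs):
    """Return (chi-square obtained, degrees of freedom, expected counts)."""
    row_totals = [sum(row) for row in obs]
    col_totals = [sum(col) for col in zip(*obs)]
    n = sum(row_totals)
    # Expected count = row marginal * column marginal / N
    expected = [[rt * ct / n for ct in col_totals] for rt in row_totals]
    chi2 = sum((o - e) ** 2 / e
               for obs_row, exp_row in zip(obs, expected)
               for o, e in zip(obs_row, exp_row))
    df = (len(obs) - 1) * (len(obs[0]) - 1)
    return chi2, df, expected

chi2, df, expected = chi_square(observed)
small_cells = sum(1 for row in expected for e in row if e < 5)

print(round(chi2, 3), df, small_cells)
```

The obtained value would then be compared with a critical value for the given degrees of freedom, or with the significance SPSS prints.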

Independent-samples T-test

A test to compare the means of two groups on a quantitative (at least ordinal, ideally interval/ratio) dependent variable. A computed variable (dmean_m) exists in this dataset that is the average amount of support R offers mother across all domains (range 1-8); that will be the dependent variable.

The actual mean and standard deviation of dmean_m for each of the groups.

The number of females and males who have non-missing data for dmean_m.

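T-value for the difference between 4.7233 and 4.3656. $t = \dfrac{\bar{X}_1 - \bar{X}_2}{\sigma_{\bar{X}_1 - \bar{X}_2}}$ where $\sigma_{\bar{X}_1 - \bar{X}_2} = \sqrt{\dfrac{s_1^2}{N_1 - 1} + \dfrac{s_2^2}{N_2 - 1}}$. (Note: as long as the sig. of F is ≥ .05, use the “equal variances assumed” row, for reasons beyond the scope of these slides.)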

Significance (p) level for t-statistic. If p ≤ .05, the two groups have statistically significantly different means. Here, females provide more support to their mothers, on average, than do males.

Confidence interval for the difference between the two means. If CI contains 0, the difference will not be statistically significant.
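The link between the t-value and the confidence interval can be checked with a few numbers. This is a minimal sketch with invented scores. It assumes the pooled-variance form of the standard error (the version used when equal variances are assumed) and a critical t of 2.306, the standard table value for 8 degrees of freedom at the two-tailed .05 level. `pooled_t_test` is a hypothetical helper name.

```python
import math
from statistics import mean, variance

# Hypothetical support scores for two small groups.
females = [5.0, 6.0, 4.0, 5.0, 5.0]
males = [4.0, 3.0, 5.0, 4.0, 4.0]

def pooled_t_test(group1, group2):
    """Return (mean difference, standard error, t, df) using a pooled variance."""
    n1, n2 = len(group1), len(group2)
    diff = mean(group1) - mean(group2)
    # Weight each group's sample variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * variance(group1) +
                  (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    std_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return diff, std_error, diff / std_error, n1 + n2 - 2

diff, std_error, t_value, df = pooled_t_test(females, males)

T_CRITICAL = 2.306  # assumed table value: two-tailed, alpha = .05, df = 8
ci_low = diff - T_CRITICAL * std_error
ci_high = diff + T_CRITICAL * std_error

significant = abs(t_value) > T_CRITICAL
contains_zero = ci_low <= 0 <= ci_high
print(round(t_value, 3), round(ci_low, 3), round(ci_high, 3), significant, contains_zero)
```

In this made-up example the interval includes 0 and the t-value falls short of the critical value, so the two checks agree that the difference is not significant.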

Paired-samples T-test

Like the independent-samples t-test, this compares two means to see if they are significantly different, but now it is comparing the average of the same people’s scores on two different variables. Often used to compare pre- and post-test scores, time 1 and time 2 scores, or, as in this case, the differences between the average amount of help Rs give to their mothers versus to their fathers.

Mean amount of support R provided to mothers.

Mean amount of support R provided to fathers.

Number of cases with valid data for both variables. SPSS also gives the correlation between the two dependent variables; that was left off here for space.

The difference between the average amount of support provided to mothers and fathers and accompanying standard deviation.

T-statistic for the difference between the two means and the significance. In this sample, respondents provide significantly more support to their mothers than to their fathers.
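A paired-samples t-test works on the difference score for each person. The sketch below uses invented scores and a hypothetical helper, `paired_t`. It divides the mean difference by its standard error (the standard deviation of the differences over the square root of the number of pairs).

```python
import math
from statistics import mean, stdev

# Hypothetical support scores from the same five respondents.
support_to_mother = [5.0, 6.0, 4.0, 5.0, 6.0]
support_to_father = [4.0, 4.0, 4.0, 3.0, 5.0]

def paired_t(first, second):
    """Return (mean difference, SD of differences, t, df) for paired scores."""
    diffs = [a - b for a, b in zip(first, second)]   # one difference per person
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)                           # sample SD (divides by n - 1)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return mean_diff, sd_diff, t, n - 1

mean_diff, sd_diff, t_value, df = paired_t(support_to_mother, support_to_father)
print(round(mean_diff, 2), round(sd_diff, 3), round(t_value, 3), df)
```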

Oneway ANOVA

Another test for comparing means, the oneway ANOVA is used when the independent variable has three or more categories. You would typically report the F-ratio (and sig.) and use the means to describe the groups.

Number of cases in each group of the independent variable.

Average amount of support provided (and standard deviation) by those with incomes < $10k.

Confidence Interval: the range within which you can be 95% certain that the group’s mean falls for the population.

Average amount of support provided by all 521 people with valid data on dmean_m.

F-statistic (and associated p-value) tests the null hypothesis that all groups have the same mean in the population. A significant F means that at least one group is different from the others. Small within-groups variance and large between-groups variance produce a higher F-value: $F = \dfrac{\text{Mean Square Between}}{\text{Mean Square Within}}$. Here we see that at least one group’s mean amount of support is significantly different from the others. Additional (post-hoc) tests can be run to determine which groups are significantly different from each other.

Variability of means between the groups: Mean Square Between $= \dfrac{SSB}{dfb}$ where $SSB = \sum N_k (\bar{X}_k - \bar{X})^2$ and dfb = k - 1. (k is number of groups; $N_k$ is # people in a given group; $\bar{X}_k$ is mean for that group; $\bar{X}$ is the overall mean)

Variability within each group: Within Groups Mean Square $= \dfrac{SSW}{dfw}$ where SSW = SST - SSB and dfw = N - k.

Total Sum of Squares (SST) = SSB + SSW
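The pieces of the ANOVA table fit together as follows. This is a sketch with three invented groups and a hypothetical helper, `one_way_anova`; it computes SST and SSB directly, gets SSW by subtraction, and forms F from the two mean squares.

```python
from statistics import mean

# Hypothetical support scores for three income groups.
groups = [[1.0, 2.0, 3.0],
          [2.0, 3.0, 4.0],
          [4.0, 5.0, 6.0]]

def one_way_anova(samples):
    """Return the sums of squares, mean squares, and F for a one-way ANOVA."""
    all_scores = [x for g in samples for x in g]
    grand_mean = mean(all_scores)
    n_total, k = len(all_scores), len(samples)
    sst = sum((x - grand_mean) ** 2 for x in all_scores)
    # Between groups: group size times squared gap between group mean and grand mean.
    ssb = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in samples)
    ssw = sst - ssb
    msb = ssb / (k - 1)          # dfb = k - 1
    msw = ssw / (n_total - k)    # dfw = N - k
    return {"SST": sst, "SSB": ssb, "SSW": ssw,
            "MSB": msb, "MSW": msw, "F": msb / msw}

result = one_way_anova(groups)
print({name: round(value, 3) for name, value in result.items()})
```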

Correlation

Pearson’s r: measures the strength and direction of association between two quantitative variables. Correlation matrices are symmetric about the diagonal.

Correlation coefficient (r) tells how strong the relationship is and in what direction. Range is -1 to 1, with absolute values closer to 1 indicating stronger relationships. Here, the frequency of visits is moderately related to the amount of emotional support given to the mother; more visits correlate with more frequent emotional support.

$r = \dfrac{\sum (X - \bar{X})(Y - \bar{Y})}{\sqrt{\left[\sum (X - \bar{X})^2\right]\left[\sum (Y - \bar{Y})^2\right]}}$

Significance (p) is the probability of seeing a correlation at least this strong in the sample if the two variables were unrelated in the population. Typically look for this to be ≤ .05.

N = sample size: this number may be different in each cell if missing cases are excluded pairwise rather than listwise.

Can you interpret this number? (How often mother forgets to ask about R’s life is negatively, albeit weakly, related to the amount of emotional support R gives mother: if mother forgets to ask a great deal of the time, she gets less emotional support from R.)
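Pearson's r can be computed directly from deviations around each mean. The sketch below uses invented scores and a hypothetical helper, `pearson_r`. It also shows why the matrix is symmetric: swapping the two variables gives the same r.

```python
import math
from statistics import mean

# Hypothetical scores for five respondents.
visits = [1.0, 2.0, 3.0, 4.0, 5.0]
emotional_support = [2.0, 1.0, 4.0, 3.0, 5.0]

def pearson_r(x, y):
    """Pearson's r from sums of cross-products and squared deviations."""
    x_bar, y_bar = mean(x), mean(y)
    cross = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    ss_x = sum((xi - x_bar) ** 2 for xi in x)
    ss_y = sum((yi - y_bar) ** 2 for yi in y)
    return cross / math.sqrt(ss_x * ss_y)

r_xy = pearson_r(visits, emotional_support)
r_yx = pearson_r(emotional_support, visits)   # same value: the matrix is symmetric

# Reversing one variable flips the sign but not the strength.
r_reversed = pearson_r(visits, [-s for s in emotional_support])
print(round(r_xy, 3), round(r_yx, 3), round(r_reversed, 3))
```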

Bivariate Regression (model statistics)

Examines the relationship between a single independent (“cause”) variable and a dependent (outcome) variable. While it’s good to look at all numbers, the ones you typically interpret/report are those boxes marked with an * (true for all following slides).

Regression line: $\hat{y} = a + bx$.

Coefficient of determination ($R^2$): the amount of variance in satisfaction with help given to mother that is explained by how often the R saw mother. $R^2 = (TSS - SSE)/TSS$. *

Multiple correlation (R): in bivariate regression, same as the absolute value of the standardized coefficient.

Independent (predictor) and Dependent variables.

F-value (and associated p-value) tells whether model is statistically significant. Here we can say that the relationship between frequency of visits with one’s mother and satisfaction with help given is significant; it is unlikely we would get an F this large by chance. *

Residual sum of squared errors (or Sum of Squared Errors, SSE) $= \sum (y - \hat{y})^2$.

Total Sum of Squares (TSS) $= \sum (y - \bar{y})^2$.

Bivariate Regression (coefficients)

Standardized coefficient (β): influence of x on y in “standard units.”

Confidence Interval: the slope +/- (critical t-value * std. error). It shows that you can be 95% confident that the slope in the population falls within this range. If the range contains 0, the variable does not have a statistically significant effect on y.

Standard error of the slope: divide the slope by this to get the t-value. *

Y-intercept (a): value of y when x is 0.

Slope (b): how much y changes for each unit increase in x. Here, for every additional “bump up” in frequency of visits, satisfaction with the amount of help given to mother increases by .157. *

T-statistic (and associated p-value) tells whether the individual variable has a significant effect on dependent variable. *

The regression equation for this model would be $\hat{y} = 1.770 + .157(x)$, where x is the frequency of visits.
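The slope, intercept, and coefficient of determination can all be worked out from the same few sums. This is a sketch with invented scores (it does not reproduce the 1.770 and .157 from the output) and a hypothetical helper, `bivariate_regression`.

```python
from statistics import mean

# Hypothetical scores for five respondents.
visits = [1.0, 2.0, 3.0, 4.0, 5.0]
satisfaction = [2.0, 1.0, 4.0, 3.0, 5.0]

def bivariate_regression(x, y):
    """Return (intercept a, slope b, SSE, TSS, R squared) for y-hat = a + b*x."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sxy / sxx                      # change in y per one-unit change in x
    a = y_bar - b * x_bar              # predicted y when x is 0
    predicted = [a + b * xi for xi in x]
    sse = sum((yi - y_hat) ** 2 for yi, y_hat in zip(y, predicted))
    tss = sum((yi - y_bar) ** 2 for yi in y)
    return a, b, sse, tss, (tss - sse) / tss

a, b, sse, tss, r_squared = bivariate_regression(visits, satisfaction)
print(round(a, 3), round(b, 3), round(sse, 3), round(tss, 3), round(r_squared, 3))
```

With one predictor, the square root of R squared equals the absolute value of Pearson's r for the same two variables.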

Multiple Regression (OLS: model statistics)

Used to find effects of multiple independent variables (predictors) on a dependent variable. Provides information about the independent variables as a group as well as individually.

Regression line: $\hat{y} = a + b_1x_1 + b_2x_2 + \dots$

R = multiple correlation. The association between the group of independent variables and the dependent variable. Ranges from 0-1.

$R^2$ and Adjusted $R^2$: how much of the variance in satisfaction with amount of help R provided mother is explained by the combination of independent variables in the model. Also called “coefficient of determination.” $R^2 = (TSS - SSE)/TSS$. Adjusted $R^2$ compensates for the fact that adding any variable to a model will raise the $R^2$ to some degree. About 14% of the variance in satisfaction is accounted for by financial and emotional support, seeing mother in person, and whether mother makes demands on R. *

Residual sum of squared errors (or Sum of Squared Errors, SSE) $= \sum (y - \hat{y})^2$.

Total Sum of Squares (TSS) $= \sum (y - \bar{y})^2$.

F-value and associated p-value tell whether the model is statistically significant (whether at least one slope is likely to differ from zero in the population). This combination of independent variables significantly predicts satisfaction with amount of help R gives mother. *

Df2 = # of cases - (# of independent variables + 1)

Df1 = # of independent variables
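The model-level numbers are tied together by the two degrees of freedom. The sketch below uses the usual textbook formulas for adjusted R squared and for F written in terms of R squared. Neither formula appears on the slide, so treat them as assumptions about what SPSS reports. The sample size of 505 is invented for illustration, and `model_summary` is a hypothetical helper.

```python
def model_summary(r_squared, n_cases, n_predictors):
    """Return (adjusted R squared, F, df1, df2) from R squared and the model size."""
    df1 = n_predictors
    df2 = n_cases - (n_predictors + 1)
    # Adjusted R squared shrinks R squared according to how many predictors were used.
    adjusted = 1 - (1 - r_squared) * (n_cases - 1) / df2
    # F compares explained variance per predictor with unexplained variance per df2.
    f_value = (r_squared / df1) / ((1 - r_squared) / df2)
    return adjusted, f_value, df1, df2

# Four predictors explaining about 14% of the variance; N = 505 is made up.
adjusted, f_value, df1, df2 = model_summary(0.14, 505, 4)
print(round(adjusted, 4), round(f_value, 2), df1, df2)
```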

Multiple Regression (coefficients)

Y-intercept (a): value of y when all Xs are 0.

Slope (b): how much satisfaction changes for each one-unit increase in frequency of visiting mother, holding the other independent variables constant. *

Standard error of the slope: divide the slope by this to get the t-value. *

Zero-order correlation = Pearson’s r. Bivariate relationship between frequency of visits and R’s satisfaction with the help R has given to mother.

Partial correlation: relationship between frequency of visits and R’s satisfaction, controlling for demands mother makes and emotional/financial support given.

T-statistic (and associated p-value) tells whether the individual variable has a significant effect on dependent variable, controlling for the other independent variables. *

Regression Equation: $\hat{y} = 1.477 + .130(\text{b3ar}) + .195(\text{m\_6}) - .009(\text{d1r}) + .033(\text{d22r})$

Standardized coefficient (β): influence of x on y in “standard units.” Can use this coefficient to compare the strength of the relationships of each of the independent variables to the dependent. Largest β (in absolute value) = strongest relationship, so here frequency of visits has the strongest relationship to satisfaction, all else constant.

OLS with Dummy Variables

Using a categorical variable broken into dichotomies (e.g., race recoded into white, black, other with each coded 1 if R fits that category and 0 if not) as predictors. In this case, the amount of help R perceives his/her adult child to need was recoded into 1 = “more than others” and 0 = “less or about the same as others.” If the concept is represented by multiple dummy variables, leave one out as the comparison group (otherwise there will be perfect multicollinearity in the model).

Slope is interpreted as the amount of difference between the “0” group and the “1” group. Here, those who perceive their children as needing more help than their peers score .321 higher on satisfaction with the amount of help they give their mother than those whose children need less or about the same amount of help, controlling for frequency of visiting mother, demands made, and emotional and financial support provided (and the difference is statistically significant).

Dummy variable. Children who need less or the same amount of help as their peers are the reference category (0).
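Dummy coding and the meaning of a dummy slope can be sketched with invented data. `make_dummies` and `slope_and_intercept` are hypothetical helpers. The example has no control variables, so the slope is simply the gap between the two group means and the intercept is the mean of the reference group.

```python
from statistics import mean

def make_dummies(values, reference):
    """One 0/1 variable per category, leaving out the reference category."""
    categories = sorted(set(values) - {reference})
    return {c: [1 if v == c else 0 for v in values] for c in categories}

def slope_and_intercept(x, y):
    """Least-squares slope and intercept for a single predictor."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sxy / sxx
    return b, y_bar - b * x_bar

# Three categories become two dummies; "other" is the comparison group.
race_dummies = make_dummies(["white", "black", "other", "white"], reference="other")

# Hypothetical data: help the child needs, and R's satisfaction score.
help_needed = ["more", "less", "same", "more", "same", "less"]
satisfaction = [4.0, 3.0, 3.0, 5.0, 4.0, 2.0]
needs_more = [1 if h == "more" else 0 for h in help_needed]   # 1 = more, 0 = less/same

slope, intercept = slope_and_intercept(needs_more, satisfaction)
mean_1 = mean(s for s, d in zip(satisfaction, needs_more) if d == 1)
mean_0 = mean(s for s, d in zip(satisfaction, needs_more) if d == 0)
print(round(slope, 3), round(mean_1 - mean_0, 3), round(intercept, 3))
```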