Do Online Friends Bring Out the Best in Us?

The Effect of Friend Contributions on Online Review Provision

Zhihong Ke

zke@clemson.edu

Clemson University

De Liu

deliu@umn.edu

University of Minnesota

Daniel J. Brass

daniel.brass@uky.edu

University of Kentucky

Accepted at Information Systems Research

May 2020

Abstract

User-generated online reviews are crucial for consumer decision-making, but suffer from under-

provision, quality degradation, and imbalances across products. This research investigates whether “friend

contribution cues,” in the form of highlighted reviews written by online friends, can motivate users to write

more and higher-quality reviews. Noting the public-good nature of online reviews, we draw upon theories

of pure altruism and competitive altruism to understand the effects of friend-contribution cues on review

provision. We test our hypotheses using data from Yelp, and find positive effects of friend contribution

cues. Users are three times more likely to provide a review after a recent friend review than after a recent

stranger review, and this effect cannot be solely explained by homophily. Furthermore, reviews written

after a friend’s review tend to be of higher quality, longer, and more novel. In addition, friend reviews tend

to have a stronger effect on less-experienced users and less-reviewed products/services, suggesting friend-

contribution cues can help mitigate the scarcity of contributions on “long-tail” products and from infrequent

contributors. Our findings hold important implications for research and practice in the private provision of

online reviews.

Keywords: Online reviews, online friends, public goods, competitive altruism, contribution quality

1. Introduction

User-generated online reviews have become a dominant source of information for consumers.

According to a 2018 report by BrightLocal, 85 percent of consumers said that their buying decisions were

influenced by online reviews (BrightLocal 2018). Prior research consistently shows that increasing the

volume of online reviews has a positive influence on product sales (e.g., Dellarocas et al. 2007; Duan et al.

2008; Forman et al. 2008). Therefore, it is of practical importance for vendors and online review platforms

(ORPs) to attract many user-generated reviews.

In reality, however, online reviews are under-provisioned (Fortune 2016; Goes et al. 2016). Studies

estimate that only 1 percent of consumers have ever written an online review (Anderson and Simester 2014;

Yelp 2011). This is not entirely surprising because online reviews are privately-provisioned public goods;

consumers have strong incentives to free-ride on the contributions of others, leading to under-provision.

Review contributions are also highly imbalanced across products (Burtch et al. 2018; Dellarocas

et al. 2010; Tucker and Zhang 2007). For example, only 2.2 percent of restaurants on Yelp receive more

than 13 reviews per month, whereas more than 30 percent receive no review (Luca 2016). Adding to these

concerns is a rapid decline in quality: the average length of online reviews has decreased from 600

characters in 2010 to just over 200 characters in 2017 (Liu et al. 2007; Mudambi and Schuff 2010;

ReviewTrackers 2018). To address these issues, ORPs have used several approaches, including offering

coupons, discounts, and other financial incentives to motivate review contributions.

1

Recent research

suggests that such tangible rewards are effective, but often have downsides such as lower-quality

reviews and eroded consumer trust (Burtch et al. 2018; Ghasemkhani et al. 2016; ReviewMeta.com 2016;

Stephen et al. 2012).

Our research explores a new “friend-contribution-cue” approach – i.e., motivating review contributions

by highlighting reviews written by one’s online friends. The friend-contribution-cue approach is applicable

1

For example, Epinions employed a revenue-sharing strategy with reviewers to encourage review generation. Amazon once offered

free products to top reviewers and allowed product owners to offer free or discounted products to reviewers in exchange for their

reviews, but discontinued this practice under criticism.

when ORPs support social networking among users.

2

Such ORPs can highlight the contributions of a user’s

online friends. For example, Yelp shows friend reviews on top of other reviews on business pages and

users’ homepages. Highlighting friend reviews may aid users in their discovery of new products and

services, but its effects on users’ contribution behavior are unexplored. We ask the following questions in

this research:

Can friend-contribution cues motivate a user to contribute a new review?

Can friend-contribution cues lead to higher quality reviews?

Readers familiar with the social influence literature may assume that friend contributions increase

users’ own contributions. Prior research has found that friends generate a positive social influence in

private-goods domains such as the adoption of paid music and store check-ins (Bapna and Umyarov 2015;

Liu et al. 2015; Qiu et al. 2018; Zhang et al. 2015). However, the public-goods nature of online reviews

suggests a countervailing free-riding effect may exist: One user’s contribution may substitute for another’s,

especially between online friends who are likely to hold similar opinions (Lee et al. 2016; Underwood and

Findlay 2004). This free-riding effect has been noted in other public-goods contexts in the forms of social

loafing, “volunteer’s dilemma,” and “bystander” effects (Darley and Latané 1968; Diekmann 1985; Karau

and Williams 1993). Because of free-riding, it is unclear whether friend contributions lead to more or fewer

reviews, especially considering that review writing is time-consuming and requires certain expertise. In

addition, we do not yet know the effect of friend contributions on contribution quality or the distribution of

reviews across products and users.

The friend-contribution-cue approach, if proven effective, can complement existing research studying

social influences in online review writing behavior. Our approach differs from an alternative social-cue

approach highlighting aggregate contributions (e.g., “3,786 users have recently contributed online

reviews”) (Burtch et al. 2018). Such aggregate-contribution cues fail to induce more reviews, although they

may increase review lengths (Burtch et al. 2018). The friend-contribution cue operates very differently from

2

Throughout the paper, we define a user as a registered member of an ORP because only registered members are allowed to make

online friends and post reviews.

the aggregate-contribution cue. The former uses specific contributions by online friends, whereas the latter

uses aggregate contributions by anonymous peers. As a result, friend-contribution cues are more personally

relevant and targeted. Notwithstanding these differences, the two forms of social cues can be used together.

We further differentiate our study from research on how ratings of friends affect subsequent ratings. Wang

et al. (2018) focus on whether the average rating of one’s friends influences the focal user’s rating (provided

that the focal user also provides a rating). Their study does not address a user’s likelihood of offering a review or the

quality of the review, as we do in our research.

We extend theories of public goods to understand the effects of friend-contribution cues on the quantity

and quality of online review provision. One theory, called “pure altruism,” holds that users contribute

because they value the welfare of others; it implies that a friend’s contribution would substitute for a focal

user’s contribution because of diminishing marginal benefits of an additional contribution to the public.

Another theory, called “competitive altruism,” holds that users make altruistic contributions to gain status

in a community; thus, a friend’s contribution could stimulate further contribution by signaling the relevance

of contributing and the presence of a favorable audience for such contributions. Building on these countervailing

arguments, we develop hypotheses about the effects of friend-contribution cues on one’s own contribution

in terms of both quantity and quality.

We test our hypotheses using a unique dataset of restaurant reviews from Yelp. Yelp provides extensive

social networking features among users and highlights friends’ reviews on business pages and users’

homepages. In addition, Yelp lets users vote on each other’s reviews and nominate outstanding users to

become “elites”, who enjoy many perks such as free dinner parties and tasting events. We assemble a user-

restaurant-week panel of review contributions from 2,923 users toward 8,289 restaurants in the state of

Washington over a period of 36 weeks. Using this panel, we formulate a discrete hazard model of a user’s

likelihood of reviewing a restaurant in a given week as a function of the number of friend reviews for the

restaurant in the preceding week. We use this model to examine the effect of friend-contribution cues and

how it varies with restaurant and user characteristics. We also study the effect of friend-contribution cues

on review quality, which we measure using the number of votes received by the review, independent quality

ratings by Amazon Mechanical Turk workers (henceforth Turker Ratings), and a review novelty score based

on the review’s content.
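As a rough illustration of the discrete hazard setup described above, the model can be sketched in a generic textbook form. The logit link and all symbols here are assumptions introduced for illustration and are not taken from the paper's own specification: $y_{ijt}$ indicates that user $i$ reviews restaurant $j$ in week $t$, $F_{ij,t-1}$ is the number of reviews of restaurant $j$ posted by friends of user $i$ in the preceding week, $x_{ij,t-1}$ collects controls (including the total number of recent reviews), and $\alpha_t$ is a period-specific baseline.

```latex
\begin{align}
h_{ijt} &= \Pr\left(y_{ijt} = 1 \mid y_{ijs} = 0 \text{ for all } s < t\right), \\
\operatorname{logit}(h_{ijt}) &= \alpha_t + \beta \, F_{ij,t-1} + \gamma^{\top} x_{ij,t-1}.
\end{align}
```

Under this sketch, a positive effect of friend-contribution cues on review quantity would correspond to $\beta > 0$, holding the controls fixed.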

One of the challenges in estimating the effect of friend-contribution cues is the potential confound of

the homophily effect; that is, two friends write reviews on the same restaurant because they have similar

preferences. We control for the homophily effect using a user’s future friends: future friends have preferences

similar to the user’s, but their reviews may not influence the user’s contribution decisions. Therefore,

the effect of future-friends’ reviews, which is driven by homophily alone, can be used as a proxy for the

homophily effect.
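The future-friend idea above can be illustrated with a minimal Python sketch that splits friend reviews of a restaurant in the preceding week into those by current friends and those by future friends. The function name, the data layout, and the cutoff rule (a friendship formed before the focal week starts counts as "current") are assumptions made for illustration; the paper's exact operationalization may differ.

```python
from datetime import date, timedelta


def count_friend_reviews(reviews, friendship_dates, restaurant_id, week_start):
    """Count preceding-week reviews of one restaurant by current vs. future friends.

    reviews: iterable of (reviewer_id, restaurant_id, posted_date) tuples.
    friendship_dates: dict mapping reviewer_id -> date the friendship with the
        focal user was formed (hypothetical layout; non-friends are absent).
    week_start: first day of the focal week.
    """
    prev_start = week_start - timedelta(days=7)
    counts = {"current": 0, "future": 0}
    for reviewer_id, rest_id, posted in reviews:
        # Keep only reviews of this restaurant posted in the preceding week.
        if rest_id != restaurant_id or not (prev_start <= posted < week_start):
            continue
        became_friends = friendship_dates.get(reviewer_id)
        if became_friends is None:
            continue  # never a friend of the focal user: a stranger review
        # Assumed rule: friendships formed before the focal week are "current";
        # friendships formed later are "future" and proxy for homophily only.
        key = "current" if became_friends < week_start else "future"
        counts[key] += 1
    return counts


# Toy data, invented purely for illustration.
example_reviews = [
    ("a", "r1", date(2013, 3, 5)),   # current friend, in window
    ("b", "r1", date(2013, 3, 6)),   # future friend, in window
    ("c", "r1", date(2013, 3, 6)),   # stranger
    ("a", "r2", date(2013, 3, 5)),   # different restaurant
    ("b", "r1", date(2013, 2, 1)),   # outside the preceding week
]
example_friendships = {"a": date(2013, 1, 10), "b": date(2013, 6, 1)}
example_counts = count_friend_reviews(
    example_reviews, example_friendships, "r1", date(2013, 3, 11)
)
```

In such a setup, the two counts would enter the model as separate regressors, so that the coefficient on future-friend reviews can serve as the homophily benchmark for the coefficient on current-friend reviews.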

2. Related Literature

In the following, we discuss the relation of this research to two literature streams – provision of online

reviews and voluntary provision of public goods – with a focus on the role of social influence in each case.

2.1. Provision of Online Reviews

The online review literature has approached the provision of online reviews from three perspectives:

valence, volume, and quality. A review’s valence refers to the tone of the review as typically measured by

the associated numeric rating. Research in this stream reveals that social factors including peer ratings (Lee

et al. 2015; Ma et al. 2013; Sridhar and Srinivasan 2012) and friend ratings (Lee et al. 2015; Wang et al.

2018) may impact review valence. Lee et al. (2015) demonstrate that higher peer ratings induce users to

also provide high ratings. Wang et al. (2018) study the effect of friend ratings on the valence of book

reviews and find that users tend to give ratings similar to those of their friends. As a result, they suggest that ratings

that follow friends’ ratings are more biased. Studies of review valence focus on whether a review leans

positive or negative rather than on the likelihood of contribution and contribution quality.

Research on the volume of online reviews shows that characteristics of the product (Dellarocas et al.

2010), a user’s consumption experience (Dellarocas and Narayan 2006), and reviewer characteristics (Goes

et al. 2014; Moe and Schweidel 2012) can all affect the quantity (or likelihood) of review provision. Within

this stream, a few studies examine how ORPs can increase the volume of online reviews using financial or

social incentives. Burtch et al. (2018) show that financial incentives increase review volume but not length.

Financial incentives often lead to undesirable side effects such as lower-quality reviews and eroded

consumer trust (Burtch et al. 2018; Ghasemkhani et al. 2016; Stephen et al. 2012).

Burtch et al. (2018) and Chen et al. (2010) study the effect of social cues on the volume of reviews.

Chen et al. (2010) demonstrate that, after being shown the median number of rating contributions, users

below (above) the median increase (decrease) their contributions. Burtch et al. (2018) show that aggregate

contribution cues increase review length but not volume. However, when combining financial incentives

with aggregate-contribution cues, one could increase both review length and the volume of reviews. As

noted in the introduction, the aggregate- and friend-contribution cues operate quite differently and thus may

be used independently.

The literature on review quality concentrates on the association between the textual features of a review and

the number of “helpfulness” votes it receives, an often-used proxy for review quality (Ghose and Ipeirotis

2011; Mudambi and Schuff 2010; Yin et al. 2014). This stream also examines the relationship between

contextual factors, including product type and reviewer characteristics, and review quality (Lu et al. 2010;

Mudambi and Schuff 2010). It does not, however, focus on how to promote review quality,

with the exception of Burtch et al. (2018), who examine the effects of financial incentives and aggregate-

contribution cues on review length, a correlate of review quality.

2.2. Social Influence and the Voluntary Provision of Public Goods

Prior research finds evidence of social influence among friends in many private-goods domains,

including adoption of paid music services and products (Bapna and Umyarov 2015; Zhang et al. 2015),

music consumption (Dewan et al. 2017), store check-ins (Qiu et al. 2018), and peer-to-peer lending (Liu et

al. 2015).

3

As mentioned earlier, social influence in the private provision of public goods is different

because of the free-riding tendency. In what follows, we focus on social influence in public-goods domains.

3

In addition, the social network literature has many examples of friend performance positively affecting an individual’s

performance (e.g., in school and workplace settings) (Altermatt and Pomerantz 2005; Cook et al. 2007). Again, free-riding does

not typically arise in these settings and some of the underlying mechanisms such as observational learning (e.g., modeling high

levels of participation in teacher–student interactions) and social support (e.g., receiving help and guidance on homework

assignments) may not apply to our context.

One stream of research investigates the effect of peer contributions in “electronic communities of

practice,” such as online discussion forums, Q&A forums, and knowledge-sharing communities (Wasko et

al. 2009; Wasko and Faraj 2005). Contributions to these forums have characteristics of public goods, but

they tend to disproportionately benefit people involved in a conversation (e.g., information seekers).

Because of the directed nature of such contributions, researchers have relied on reciprocity theories to

explain the effect of peer contributions (Jabr et al. 2014; Xia et al. 2011). The reciprocity theories may not

apply in online reviews because online reviews benefit a broad audience rather than specific individuals.

Perhaps more relevant to this research is the literature on charitable contributions, which are a form of

private provision of public goods. This stream widely acknowledges that peer contributions can potentially

crowd out one’s own contributions. For example, Tsvetkova and Macy (2014) show that observing others’

helping behavior decreases one’s own helping. However, findings are mixed on whether there is a positive

or negative relationship between the contributions of others and one’s own contribution (Shang and Croson

2009). To account for the positive relationships, this literature offers several informal explanations,

including conformity (Bernheim 1994), achieving social acclaim (Vesterlund 2006), gaining social

approval, and peer contribution as a signal of a charity’s quality (Vesterlund 2003). The charitable giving

literature has not examined the role of (online) friend contributions. Furthermore, online reviews are distinct

from charitable giving in at least two dimensions: online reviews may reflect one’s intelligence and skills,

and there are user communities for online reviews.

3. Theoretical Background and Hypotheses

In this section, we develop the hypotheses for the effect of friend contribution cues (or friend

contributions for short, provided that they are highlighted). Using restaurant reviews as an example, we

examine how the addition of a friend review, relative to that of a stranger review, affects a focal user’s

contribution in terms of probability of contribution and review quality. We will focus on friends’ recent

contributions because we expect a decay effect (that we later confirm): the chance of a user acting on a

friend’s review while it is fresh is much higher than when the friend review has been posted for a long time.

We note that it is common for studies of online reviews to focus on recent stimuli (Dellarocas et al. 2010;

Duan et al. 2008; Wang et al. 2018). We also limit ourselves to contributions to the same restaurant because

we do not have a good way of attributing other-restaurant contributions to the focal friend reviews.

3.1. Effect of Friend Contributions on Review Quantity

To understand users’ contribution behavior under the influence of friend contributions, we draw upon

theories of private provision of public goods. Though social influence theories such as social learning and

normative social influence may seem relevant (Aral and Walker 2011; Iyengar et al. 2011), we choose

theories of public goods as an overarching theoretical framework for two main reasons.

4

First, social-

influence theories are useful for explaining social contagion in the diffusion of products, services, and ideas,

but they do not provide an explanation of why an individual contributes to public goods in the first place.

Thus, they are incomplete for explaining contributions to public goods. Zeng and Wei (2013) made a similar

observation when studying how social ties affect similarities of photos uploaded to Flickr. Second, most

social influence theories do not address contribution quality, which is not an issue in adoption settings but

is one of the important goals of this research.

We first draw upon a well-known theory of pure altruism, which suggests that individuals make

altruistic contributions because they value not only their own welfare but that of others (Andreoni 1989,

1990). This theory is consistent with the idea that one of the main motivations for writing an online review

is to help others make a better purchase decision (Dichter 1966; Hennig-Thurau et al. 2004). There is also

neural evidence in support of pure altruism – people’s neural activity in value/reward areas correlates with

their rate of actual charitable donations (Harbaugh et al. 2007; Hubbard et al. 2016).

The theory of pure altruism leads to an important consequence for peer contributions (Tsvetkova and

Macy 2014): As peers contribute more to public goods, an additional unit of contribution adds less value to

the collective good and, therefore, an individual has less incentive to contribute. Such a substitution effect

has been documented in contexts such as charitable contribution (Shang and Croson 2009; Tsvetkova and

4

Social influence and theories of public goods do interact, especially in the case of competitive altruism theory. Later, when

deriving implications of competitive altruism for the effect of friend contributions, we do invoke arguments similar to social

influence theories: e.g., we argue that friend contributions signal relevance (similar to the arguments of social learning) and social

desirability of such contributions (similar to the arguments of normative social influence), though we place such arguments in the

framework of competitive altruism.

Macy 2014; Witty et al. 2013). The substitution effect can be further amplified when the peer contribution

is a friend contribution. This is because online friendships tend to form around shared interests and opinions

(Dey 1997; Moretti 2011). When a user sees a friend review on a restaurant, compared to a stranger review,

the user is more likely to consider writing her own review as redundant. Therefore, she is less likely to offer

a review of the same restaurant. In sum, the pure altruism theory of public goods would suggest that a friend

review, relative to a stranger review, would reduce a user’s own contribution.

Another theory of public goods, called competitive altruism, holds that people contribute to public

goods not because of their genuine concern for others, but to gain status in a social group that rewards

individuals based on their relative contribution and commitment to the group (Hardy and Van Vugt 2006;

Roberts 1998; Willer 2009).

5

Recognizing that individuals may lack the motivation to contribute to public

goods, the group as a whole has the incentive to collectively reward people who make outstanding altruistic

contributions (e.g., by granting such individuals prestige, trust, and preferential treatment in partner

selection). One such example is the peer review of journal submissions: Academic communities use best-

reviewer awards, recognition by journal editors, and promotion to editorial positions to motivate voluntary

peer reviews. Competitive altruism holds that in a community that associates status rewards with

outstanding altruistic behaviors, individuals would compete to make altruistic contributions in ways that

suggest a high level of competence, generosity, and commitment (Hardy and Van Vugt 2006; Willer 2009).

Existing research in online reviews lends support to the theory of competitive altruism: survey studies

consistently show that an important motivation for writing online reviews is the pursuit of attention, status,

and superiority (Huang et al. 2016; Pan and Zhang 2011; Wang et al. 2017).

Compared to pure altruism, competitive altruism holds a very different implication for the effect of

friend contributions. Research suggests that social contacts are especially helpful for gaining status

(Anderson and Kilduff 2009). With a large social group, an individual’s altruistic contributions can easily

5

Although the term “competitive altruism” is relatively new, a few key elements of the theory (e.g., an individual’s concern for status as

a driving force for altruistic contribution) have been previously noted in Bernheim's (1994) theory of conformity and Milinski et

al.'s (2002) use of reputation to solve the tragedy of the commons. The theory’s predictions are also consistent with empirical

findings that status competition can provide strong motivations for voluntary giving (Donath 2002; Jones et al. 1997) and

contribution in online communities (Levina and Arriaga 2014; Wasko and Faraj 2005).

go unnoticed by random strangers. Online friends, on the other hand, are more likely to pay attention to a

user’s contribution and provide favorable appraisals because of their shared interests and personal

connection with the focal user. Therefore, online friends would be more helpful for a user’s pursuit of status.

In addition, friend contributions can serve as a “beacon” for altruistic contributions: Existing friend

contributions suggest that contributing a review of this restaurant is socially desirable and would enhance

the user’s commitment to her social group. In contrast, existing stranger contributions do not offer such

added benefits. Therefore, competitive altruism predicts a positive effect of friend contributions: A user is

more likely to offer her review after observing a friend review, compared to a stranger review.

Despite the different predictions of pure and competitive altruism, they may not be mutually exclusive.

A user motivated mainly by competitive altruism may still have concerns with being redundant after a

friend's contribution; conversely, a user whose main goal is to help others may still value the status-building

benefits of contributing after a friend. In our research context, though, we expect the effect of competitive

altruism to dominate because of a strong user community and status system on Yelp. First, the platform and

its users have invested strongly in community building. Second, as described earlier, Yelp has an elaborate

community-driven status system. Each year, the community selects new elite users based on community

votes and peer nominations. Lastly, Yelp and the user community provide enhanced status benefits. Elite

users not only enjoy prestige within the community, but also perks offered by store owners and/or the

platform. Even earning a local status can have benefits.

Even non-elite users can benefit from enhanced

status: e.g., they would have a higher chance of being invited to official events for all Yelp users and private

gatherings. Therefore, we expect the prediction of competitive altruism will prevail, leading to a positive

effect of friends’ reviews.

H1: Holding the total number of recent reviews constant, a user’s likelihood to review a restaurant

increases with the number of recent reviews posted by her friends on that restaurant.

In addition, we explored two variables that might moderate our main hypothesis: store popularity (as

measured by the number of existing reviews) and the user’s reviewing experience. We argue that pure and

competitive altruism can hold different implications for such moderating effects. Due to the exploratory

nature of our arguments, however, we do not offer formal hypotheses.

From the perspective of pure altruism, when a restaurant has more existing reviews, each additional

review adds less value. Though a friend review is a stronger substitute for the focal user’s review than a

stranger review, both have a diminishing effect as the restaurant has more existing reviews. Therefore, the

additional (negative) substitution effect of a friend review also diminishes with more existing reviews,

implying that the effect of friend reviews increases with the number of existing reviews. If the focal user has

more experience in writing reviews, her contribution would be higher, and the substitution effect of a friend

review would be weakened, suggesting an increase in the effect of friend reviews.

From the perspective of competitive altruism, when a restaurant has more reviews, contributing an

additional review after a friend is less helpful for status building. This is because such a contribution is less

distinctive and less helpful for the social group to distinguish itself (Levina and Arriaga 2014). Therefore,

the positive effect of contributing after a friend contribution is reduced. A more experienced user, because

of her higher status, is less eager to impress her friends. Therefore, we expect the positive effect of friend

contributions to be weaker for more experienced users.

In sum, pure and competitive altruism lead to different predictions on moderating effects. Again, noting

the strength of community and status system in our setting, we expect the predictions of competitive

altruism to prevail, though acknowledging that the forces of pure and competitive altruism may coexist.

3.2. Effect of Friend Contributions on Review Quality

Pure altruism theory suggests that, after a friend’s contribution, the marginal value of another

contribution decreases. This leads to a lower effort by the focal user, which could result in a lower quality

review. On the other hand, competitive altruism suggests that individuals contribute reviews as a way of

gaining status, and they will do so in ways that suggest a high level of competence and generosity (Anderson

and Kilduff 2009). When a user offers a review after a friend, she knows her friends will pay close attention,

so she will put in more effort to produce a high-quality review to impress her friends. In this way, friends

can bring out the best in the user. Similar to Hypothesis 1, we expect that the prediction of competitive

altruism will prevail in our context, leading to a positive effect of a friend contribution on the quality of the

subsequent contribution, and such an effect would increase with the number of friend contributions.

H2: Holding the total number of recent reviews constant, the quality of a user’s review of a restaurant

increases with the number of recent reviews posted by her friends on that restaurant.

4. Research Context and Data

We collected our data from Yelp, one of the largest and most successful online review platforms in the

world. Yelp operates as a platform for user-generated reviews for local businesses such as restaurants and

schools. Only registered users can write reviews. Each registered user has a public profile that includes

information such as the user’s name, location, reviews written, friends, bookmarks, and compliments

received (see the Online Appendix for details). Yelp has extensive support for social networking. Users

can also vote on existing reviews (no login required) written by others on three dimensions: useful, funny,

and cool (Figure 1). Users can also follow other users and send compliments to them. A user can request to

become friends with other users.

6

Once the friend request is confirmed, users can receive updates on the

friend’s activities, such as the friend’s reviews and photos, via the “friends” section of their private

homepage (Figure 1a). Friend reviews will also appear on top of the review list on a business page (Figure

1b). Yelp does not send notifications of friend reviews to users.

To encourage contributions and community building, the Yelp Elite Council selects elite reviewers each

year who are deemed stellar community members and role models. The selection is based on peer

nominations and takes into account the quantity and quality (votes) of one’s contributions.

7

Elite users are

honored with a badge on their profile. Yelp elites enjoy many tangible benefits including invitations (with

guest passes) to free Yelp Elite events and tasting events organized by businesses.

6

Such “online friends” are typically formed based on shared personal interests, and use electronic connection and communication

as a primary form of interaction with each other (Dennis et al. 1998; Hiltz and Wellman 1997; Ridings and Gefen 2006).

7

According to a Yelp blog article (https://www.yelpblog.com/2012/01/what-makes-a-yelper-elite), Yelp does not have a published

checklist for its Elite criteria. Unofficial sources suggest that elite users are selected based on their last year’s review contributions

(both quantity and quality), and their engagement with the community, as reflected by their activities such as sending compliments,

casting votes, and answering questions. The Elite status is not permanent. A user must earn the Elite badge each year.

We collected data on restaurant reviews in the state of Washington (WA) between March 2013 and

November 2013.

8

To obtain a list of users in the WA area who write restaurant reviews, we started with all

551 elite users located in Seattle, WA, then obtained their friend lists, which resulted in 33,815 users.

Among the 33,815 elite users’ friends, we selected our study sample as those who were (1) located in WA

(11,637), and (2) active (i.e., wrote at least one review on WA restaurants) during our study period (3,630).

9

The resulting set of 2,923 users accounts for 78% of all users who meet the two criteria,

10

suggesting that

we have a fairly comprehensive list of users.

For each user in our study sample, we revisited the user’s profile and list of friends every month between

March 2013 and April 2014. We also collected all their reviews, bookmarks, and compliments received

since March 2012. To ensure that we had complete data on reviews, we separately collected a total of

109,402 reviews on all 8,289 WA restaurants generated during our study period.

5. Analysis on Review Quantity

5.1. Dataset, Model, and Variables

To test the effect of friend reviews on review quantity, we constructed a user-restaurant-period

(week) panel in the following way. First, we intersected the 8,289 WA restaurants with 2,923 users to obtain

24,228,747 user-restaurant pairs. Among all user-restaurant pairs, 18,387 user-restaurant pairs were events

(i.e., the user wrote a review for the restaurant during our study period). Because events were rare in our

data, we sampled all available events and a tiny fraction of nonevents, and used weighting to correct the

estimated coefficients (King and Zeng 2001). Specifically, we kept all events and randomly sampled five

times the number of events, without replacement, from available nonevents (we also tested sampling three

and seven times the number of events and obtained similar results). We then intersected the resulting

110,322 user-restaurant pairs with 36 periods to obtain 3,971,592 user-restaurant-period triples. Finally, we

8

We picked the WA area because the number of restaurants and the number of reviews per month in this area are close to the

average among 21 metropolitan areas featured on the front page of Yelp (Wang 2010).

9

A robustness test including inactive users yields consistent results (see the Online Appendix). It is worth noting that, even in the

current sample, there are cases where the focal user had not written any review before period t.

10

Over time, we collected all users who wrote a review on any of the 8,289 WA restaurants in our dataset, or who are either friends,

or friends of friends of the 551 elite users. Among the resulting 1,197,043 users at the end of our data collection, a total of 3,748

users were located in WA and had written at least one review on a WA restaurant during our study period.

dropped cases where users had already written a review for the given restaurant, and obtained 3,663,479

cases for our analysis.
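The counts in this panel construction can be checked with a few lines of arithmetic. The sketch below is only an illustration: the variable names are ours, while the numbers (including the five-to-one nonevent ratio and the 36 periods) are taken from the text.

```python
# Counts reported in the text; variable names are illustrative.
n_restaurants = 8_289
n_users = 2_923
n_events = 18_387          # user-restaurant pairs with a review
nonevent_multiple = 5      # nonevents sampled per event
n_periods = 36             # weekly periods

all_pairs = n_restaurants * n_users
sampled_pairs = n_events * (1 + nonevent_multiple)
triples = sampled_pairs * n_periods

print(all_pairs)      # 24228747
print(sampled_pairs)  # 110322
print(triples)        # 3971592
```

The final analysis sample (3,663,479 cases) is smaller than the 3,971,592 triples because periods after a user has already reviewed the restaurant are dropped.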

Our dependent variable is a binary indicator of whether user i wrote a review on restaurant

j in period t (i.e., whether user i “dies” in period t). Because a user can submit at most one review per

restaurant,

11

and the panel consisted of discrete periods, we adopted a discrete-time survival model for our

data, where an event is a review. The discrete-time survival model is equivalent to the logit model; the

discrete-time hazard is the probability of dying (i.e., writing a review) conditional on survival up to that point.
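A minimal sketch of how a logit hazard turns into period-by-period event probabilities may help here. The function names and the linear-index values are hypothetical and are not estimates from our data; the sketch only illustrates the general discrete-time survival logic, in which the first review falls in period t only if no review was written in earlier periods.

```python
import math

def hazard(v):
    """Logit hazard: probability of the event in a period, given no event so far."""
    return 1.0 / (1.0 + math.exp(-v))

def first_event_probs(index_by_period):
    """Probability that the first (and only) event falls in each period."""
    probs, survival = [], 1.0
    for v in index_by_period:
        h = hazard(v)
        probs.append(survival * h)   # survived so far, then event now
        survival *= 1.0 - h          # still at risk next period
    return probs, survival

# Hypothetical linear-index values for three periods (not estimates from the paper).
probs, still_at_risk = first_event_probs([-6.0, -5.0, -6.0])
print(probs, still_at_risk)
```

The event probabilities and the remaining survival probability sum to one, which is why user-restaurant-period cases after a review can be dropped from the risk set.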

Logit models are known to sharply underestimate event probabilities in samples with fewer than 200

events (King and Zeng 2001). To avoid such a bias, we adopted Rare Events logit (ReLogit) (King and Zeng

2001, 2002) and used standard logit as a robustness check.
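As an illustration of the corrections described by King and Zeng (2001) for event-enriched samples, the sketch below computes the observation weights and the prior-corrected intercept. It assumes, purely for illustration, that the population event share is measured at the user-restaurant pair level and that the sample keeps five nonevents per event; the function names are ours and do not correspond to any particular software package.

```python
import math

def case_control_weights(tau, ybar):
    """Weights for events (w1) and nonevents (w0), per King and Zeng (2001)."""
    w1 = tau / ybar
    w0 = (1.0 - tau) / (1.0 - ybar)
    return w1, w0

def prior_corrected_intercept(b0, tau, ybar):
    """Intercept correction for a logit fit on an event-enriched sample."""
    return b0 - math.log(((1.0 - tau) / tau) * (ybar / (1.0 - ybar)))

tau = 18_387 / 24_228_747   # assumed population event share (pair level)
ybar = 1 / 6                # event share after keeping 5 nonevents per event
w1, w0 = case_control_weights(tau, ybar)
print(round(w1, 5), round(w0, 4))
```

Events are down-weighted and nonevents up-weighted so that the weighted sample reproduces the population event share.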

A potential confound of friend-contribution effects is homophily: a pair of friends may independently choose

to review the same restaurant because of their similar preferences. To control for homophily, we followed

Wang et al. (2018) and included the number of reviews written by future friends as a control. Future friends

share similar preferences with the focal user, but because the friendship has not yet formed, future-friend

reviews could not have influenced the focal user. Any effect of a future-friend review is a result of homophily only. If the effect of a current-friend

review exceeds that of a future-friend review, we can infer the influence of friend contribution beyond

homophily.

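Formally, we assume the utility for user i to write a review on restaurant j in period t, $U_{ijt}$, is a function

of the numbers of reviews written by current friends ($\mathit{CurFrndReviews}_{ij,t-1}$), future friends

($\mathit{FutFrndReviews}_{ij,t-1}$), and anyone ($\mathit{NewReviews}_{j,t-1}$) on restaurant j in period t-1, additional control

variables, and an i.i.d. random component $\varepsilon_{ijt}$ with a type-I extreme value distribution.

$$U_{ijt} = \beta_0 + \beta_1\,\mathit{CurFrndReviews}_{ij,t-1} + \beta_2\,\mathit{FutFrndReviews}_{ij,t-1} + \beta_3\,\mathit{NewReviews}_{j,t-1} + \boldsymbol{\gamma}'\,\mathbf{Controls}_{ijt} + \varepsilon_{ijt} \qquad (1)$$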

11

Yelp allows users to update their reviews at a later time, but such update incidents are rare. We focus on initial reviews because

we are interested in whether users decide to offer a review.

Control variables. We included an extensive list of control variables (see Table 1 for a description).

We first controlled for several user characteristics. Following Wang (2010), we controlled for the number

of compliments sent and received (Log#Compliments), and the number of friends (Log#Friends). We used the

number of reviews by the user in the last period (#SelfReviews) and the number of cumulative reviews by

the user up to the last period (Log#CumSelfReview) to control for a user’s tendency to write reviews. To

control for the life cycle of users on the platform, we included tenure on the platform (LogTenure). We also

controlled for a number of other user characteristics including elite status (Elite), gender (Female), and

estimated income (CityIncome).

12

The estimated income was approximated by the median household

income of the city where the user lives. We used the distance between users and restaurants to capture

geographical proximity (Dist).

We controlled for a number of restaurant characteristics that may affect a user’s review decision,

including the restaurant’s average rating (AvgRatingRestaurant), variance of existing ratings

(AvgVariRestaurant), and cumulative reviews (Log#CumReviews) because prior research suggested that

these affect the quantity of new reviews (Moe and Schweidel 2012). We also included price range (Price)

coded from levels 1 through 4 based on Yelp reported price ranges ($ to $$$$), whether the restaurant page

has been claimed by its owner (the owner of a claimed restaurant is more likely to pay attention to online reviews, which may encourage

users to submit reviews) (Claimed), restaurant categories (16 latent category dummies), and whether the

restaurant was promoted by Yelp (Promoted). The variable Promoted indicates whether the restaurant was

featured in the Yelp weekly email to users in period t-1. This variable allows us to control for marketing

campaign effects. We coded restaurant categories by feeding documents of raw restaurant categories, one

per restaurant, into a Latent Dirichlet Allocation (LDA) algorithm to recover the underlying latent

categories (16 of them) and the mapping of restaurants into latent categories (see the Online Appendix for

12

We inferred gender from the users’ reported first names using Behind the Name’s database (https://www.behindthename.com)

that lists 21,100+ names and their genders. There are 134 cases where the first names were not in the database or gender ambiguous.

We asked two research assistants to independently code the 134 cases based on users’ profile photos. Among these, there were

eight cases where profile photos did not provide any gender information (e.g., foods, pets). The intercoder reliability was 0.95. We

also validated our automatically coded gender by randomly sampling 100 users and comparing them with manual coding based on

profile photos. The accuracy of automatic coding was 98%, which we deemed as adequate.

details). Finally, to control for temporal shocks to review quantity, we included month dummies. Table 1

provides summary statistics of the dataset.

5.2. Main Results on Review Quantity

Prior to estimating the models, we conducted collinearity tests and found no signs of collinearity (VIF

< 3). We estimated three models, starting with only control variables, then adding current friends’ reviews

and new reviews in the last period, and finally adding future-friends’ reviews. We ran both ReLogit and

logit models with weighting adjustments. The results are shown in Table 2. Because the results are

consistent across models, we omit Logit-1 and Logit-2 for brevity and report the results of ReLogit-3.

CurFrndReviews has a positive effect (OR = 2.95, p < 0.001, OR for odds ratio). FutFrndReviews also

has a positive effect (OR = 1.87, p < 0.001), but smaller than that of CurFrndReviews. An F-test comparing

the odds ratios for CurFrndReviews and FutFrndReviews is significant (F = 7.81, p = 0.005), indicating the

existence of friend effects beyond homophily. Thus Hypothesis 1 is supported.

Compared with current friend’s reviews, NewReviews, which captures the effect of stranger reviews,

has a much smaller effect (OR = 1.10, p < 0.001). The effect of CurFrndReviews (OR = 2.95) is comparable

to that of reviews promoted in Yelp’s weekly newsletters (OR = 2.73), suggesting a strong effect of friend

reviews. We further computed the predicted probabilities of focal users writing a review when

CurFrndReviews equals 0 (i.e., no friend review) and 1 (i.e., one friend review), holding all other predictors

at their means. We find that the probability of writing a review is about three times as high when there is a friend

review as when there is none (0.0000225/0.00000765 ≈ 2.94).
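The arithmetic behind this comparison can be reproduced directly. The sketch below uses the two predicted probabilities and the odds ratio reported above; the variable names are ours. It also shows that, because the event is so rare, applying the odds ratio to the baseline odds gives almost the same answer as multiplying the baseline probability.

```python
p_no_friend = 0.00000765   # predicted probability, CurFrndReviews = 0 (from the text)
p_friend = 0.0000225       # predicted probability, CurFrndReviews = 1 (from the text)
odds_ratio = 2.95          # ReLogit-3 estimate for CurFrndReviews

ratio = p_friend / p_no_friend

# Apply the odds ratio to the baseline odds and convert back to a probability.
odds0 = p_no_friend / (1.0 - p_no_friend)
odds1 = odds_ratio * odds0
p_implied = odds1 / (1.0 + odds1)

print(round(ratio, 2))      # 2.94
print(f"{p_implied:.3e}")   # 2.257e-05
```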

The effects of most control variables are in the expected directions. Log#Compliments has a positive

effect, suggesting that socially active users are more likely to provide reviews. Both #SelfReviews and

Log#CumSelfReview have a positive impact, demonstrating that productive users tend to write more

reviews. As expected, Elite and CityIncome have a positive effect, whereas LogTenure and Dist have a

negative effect. Log#Friends has a negative impact, suggesting that having more friends, while fixing the

number of friend reviews on the restaurant, is negatively associated with the user’s probability of reviewing

the restaurant. This finding is consistent with conformity: When a user observes that a larger proportion of

friends do not contribute, she is more likely to conform to the norm of not contributing (Carpenter 2004).

Consistent with Moe and Schweidel (2012), AvgRatingRestaurant and Log#CumReviews both have a

positive impact, demonstrating that users tend to review highly-rated and often-reviewed restaurants.

AvgVariRestaurant has a negative effect, suggesting that users are less likely to review the restaurants if

prior users have very different opinions. This is consistent with prior findings that consumers avoid visiting

restaurants with high uncertainty in quality (Wu et al. 2015). Promoted, Claimed, and Price all have a

positive impact.

In the last two columns of Table 2, we further show that the effect of friend reviews decreases with

store popularity (measured by log number of existing reviews, Log#CumReviews) (a plot of this effect is

available in the Online Appendix) and the focal user’s reviewing experience (measured by log number of

past reviews, Log#CumSelfReview), suggesting the friend-contribution-cue approach has a stronger effect for

“long-tail” restaurants and less-experienced users. We report several robustness tests in the Online

Appendix, including (a) three ways of validating that future-friends’ reviews are a good proxy for

homophily, (b) evidence that observed effects cannot be explained by awareness effects alone or by friends

going to restaurants together, and (c) consistent results when including older friend reviews and inactive users.

6. Analysis on Review Quality

6.1. Effect of Friend Contributions on Votes

Review quality reflects a consumer’s evaluation of how useful a particular review is in assisting a

purchase decision. We used several different measures of review quality. The literature on review quality

has predominantly used helpfulness votes received by a review as a proxy for review quality (e.g., Burtch

et al. 2018; Otterbacher 2009; Wang et al. 2017). Following the literature, we first used votes to measure

review quality. Yelp has three kinds of votes: useful, funny, and cool. We constructed two vote-based

measures of the review quality: combined votes (LogCombinedVotes) and useful votes only

(LogUsefulVotes).

We constructed a user-restaurant panel consisting of users who have offered a review for the

restaurants. We used CurFrndReviews and NewReviews as independent variables and added ReviewAge to

control for the effect that older reviews have more time to get votes.

13

We also included many restaurant

attributes and dynamic user attributes as controls. We estimated a panel-OLS model with user fixed effects.

We first estimated a model with only control variables, then added CurFrndReviews and NewReviews.

Our fixed-effect panel-OLS results are reported in Table 3 (M1-M4). As shown in M2 and M4, the

coefficients for CurFrndReviews are positive and significant, suggesting that friend reviews have a positive

effect on the quality of the review contributed by the focal user, supporting Hypothesis 2.

6.2. Effect of Friend Contributions on Turker Ratings of Review Quality

Because votes can be biased by extraneous factors unrelated to review quality, such as the order in

which reviews are displayed or the social relations between voters and the reviewer, we implemented an

alternative measure of review quality by asking Amazon Mechanical Turk workers, or “Turkers,” to rate

the quality of reviews on a 5-point scale (1 = low quality, 5 = high quality). Instead of rating all reviews,

which is costly, we selected carefully matched pairs of reviews. Specifically, we identified all users who

have written two reviews: one preceded by exactly one friend review in period t-1, no stranger review in

period t-1 and no friend review in prior periods (AfterFrndReview=1); one preceded by exactly one stranger

review in period t-1, no friend review in period t-1 and no friend review in prior periods

(AfterFrndReview=0). This design resulted in 52 users and 104 reviews. We obtained four Turker ratings

per review (see the Online Appendix for details). We ran an OLS model with user fixed-effects to control

for user-specific effects on review quality. Our results, reported in Table 4, show that the coefficient of

AfterFrndReview is positive and significant, suggesting that exposure to a friend review resulted in a higher-

quality review than exposure to a stranger review. This lends further support for Hypothesis 2.
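To see what user fixed effects do in this matched-pair design, consider the sketch below. With exactly two reviews per user, one after a friend review and one after a stranger review, the fixed-effects (within) estimate of AfterFrndReview equals the average within-user difference in ratings. The ratings are hypothetical, and treating each review's score as a single mean Turker rating is an assumption made only for illustration.

```python
def within_estimator(rows):
    """OLS slope after demeaning y and x within each user (user fixed effects).

    rows: iterable of (user_id, x, y) tuples.
    """
    by_user = {}
    for user, x, y in rows:
        by_user.setdefault(user, []).append((x, y))
    num = den = 0.0
    for obs in by_user.values():
        mx = sum(x for x, _ in obs) / len(obs)
        my = sum(y for _, y in obs) / len(obs)
        for x, y in obs:
            num += (x - mx) * (y - my)
            den += (x - mx) ** 2
    return num / den

# Hypothetical ratings: (user, AfterFrndReview, mean Turker rating).
rows = [
    ("u1", 1, 4.0), ("u1", 0, 3.5),
    ("u2", 1, 3.0), ("u2", 0, 3.25),
    ("u3", 1, 4.5), ("u3", 0, 3.5),
]
beta = within_estimator(rows)
diffs = [0.5, -0.25, 1.0]   # friend minus stranger, per user
print(beta, sum(diffs) / len(diffs))
```

Because each user serves as her own control, stable differences in writing ability across users cannot drive the estimate.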

13

Additionally, to ensure all reviews had enough time to gather votes, we collected the votes of all reviews 2 years after the most

recent reviews in our dataset were written.

6.3. Effect of Friend Contributions on Review Novelty

If a review’s content overlaps significantly with existing reviews, the review does not provide

additional information for consumers, and is judged to be of lower quality. To capture this dimension of

review quality, we calculated a novelty score, based on the cosine distance between the Latent-Semantic-

Analysis-based representations of the focal and prior reviews of the same restaurant (see the Online

Appendix for details). We replicated our analysis on votes with novelty score as the dependent variable.

Our results (Table 3, M5 and M6) show that CurFrndReviews has a positive effect on review novelty,

lending further support to Hypothesis 2. In the Online Appendix, we further show that our results hold if

we use review length as the dependent variable or include older friend reviews.
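The idea of a distance-based novelty score can be sketched as follows. This is a simplified stand-in rather than our actual procedure: raw term counts replace the Latent Semantic Analysis representation, and averaging the distance over prior reviews is an assumption (the aggregation we used is described in the Online Appendix). The function names and example reviews are hypothetical.

```python
import math
from collections import Counter

def term_vector(text):
    """Bag-of-words term counts (a stand-in for an LSA representation)."""
    return Counter(text.lower().split())

def cosine_distance(a, b):
    """1 - cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return 1.0 if norm == 0 else 1.0 - dot / norm

def novelty(focal, prior_reviews):
    """Mean cosine distance from the focal review to each prior review."""
    fv = term_vector(focal)
    dists = [cosine_distance(fv, term_vector(p)) for p in prior_reviews]
    return sum(dists) / len(dists)

prior = ["great tacos and friendly staff", "friendly staff but slow service"]
repeat_score = novelty("great tacos and friendly staff", prior)
fresh_score = novelty("quiet patio with live jazz", prior)
print(repeat_score < fresh_score)
```

A review that repeats an earlier one scores low, whereas a review sharing no terms with prior reviews scores at the maximum of 1. The sketch assumes at least one prior review exists.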

7. Discussion and Implications

Motivated by under-provision, quality degradation, and imbalances of online reviews, we investigate

whether an online review platform can use friend-contribution cues to motivate users to write more and

higher-quality reviews. We find friend contributions to have a positive effect on users’ tendency to

contribute and the quality of the resulting reviews. Users are three times more likely to provide a review

after a friend has written one on the same restaurant, and this effect cannot be solely explained by homophily

or awareness. Interestingly, friend reviews have a stronger effect on less-reviewed stores and less-

experienced users. Reviews written after a friend’s review are of higher quality, longer, and more novel.

7.1. Contributions to the Literature

This research makes two main contributions. First, building on theories of public goods, we developed

a novel theoretical understanding of users’ contribution behaviors under friend influence in online user

communities such as Yelp. Pure altruism holds that contributions are motivated by concern for others’ welfare

whereas competitive altruism holds that the pursuit of status can motivate altruistic contributions. We

extended these theoretical perspectives to study the effect of friend-contribution cues and obtained a few

distinct predictions that were supported by our empirical findings. These include: (1) users respond to

friend-contribution cues by increasing their own contributions, despite the incentive to free-ride; (2) the

effect of friend-contribution cues is stronger for less-reviewed restaurants and less-experienced users; (3)

friend-contribution cues lead to higher quality reviews. Overall, we found competitive altruism to be a

useful theoretical lens for understanding the private provision of public goods in an online community such

as Yelp. We believe such a theoretical perspective can offer new insights for other communities of user-

generated content.

Second, we contribute to the literature of online reviews by identifying a “friend-contribution-cue”

approach to promoting more and higher-quality reviews. Our approach complements the existing

approaches (Burtch et al. 2018; Chen et al. 2010) by allowing ORPs to leverage social relations among

users. Users who are exposed to reviews written by their online friends are three times more likely to offer

a review, and such a review tends to be longer and more novel and to receive more votes. Importantly, the

friend-contribution-cue approach is more effective for less-reviewed products/services and less-

experienced users, suggesting its potential for mitigating imbalances in online reviews and motivating

occasional contributors. These combined benefits address important gaps in existing approaches for

motivating reviewer contributions.

7.2. Managerial Implications

Our findings suggest that, to increase quantity and quality of review production, vendors and platforms

should leverage social networks among users by highlighting recent reviews contributed by their friends.

Our analysis suggests that the effect of friend contributions is comparable to the promoted reviews in Yelp’s

weekly newsletters (Table 2). For the most effective results, vendors and platforms should target

products/services that have few reviews and less-experienced users. Our results also suggest the value of

promoting/facilitating competitive altruism in the volunteer reviewer community. This might include: (1)

instituting a community-driven process for selecting outstanding contributors; (2) selecting the outstanding

contributors based on altruistic contributions and commitment; and, (3) offering complimentary

community-based rewards for outstanding contributors (e.g., dinner parties, tasting events, and privileges

within the community). One caveat when using friend reviews is the finding of Wang et al. (2018) that friend

influence may increase biases in review ratings. Platforms should be aware of such a potential downside

and take steps to mitigate it, such as by favoring independent reviews when aggregating ratings.

7.3. Limitations and Future Research

This study has several limitations. First, although we have controlled for homophily and many other factors,

we cannot completely rule out the possibility of unobserved events driving both friend contributions and

focal users’ contributions. Randomized field experiments can help alleviate such concerns. Second, we do

not have data to further delineate the effects of friend contributions by stages of a user’s journey. We present

evidence that the observed effect cannot be explained by increased awareness alone, but further research is

needed. Third, our reliance on a popular snowball-sampling approach may introduce biases, despite the

fact that our sample covers nearly 80% of target users. Fourth, we do not have data to measure whether the

users actually read the friend reviews. We present evidence that users only act on the friend review written

in the last period, not before. However, we do not know if this non-effect of the friend reviews prior to the

last period is because users read them but are not influenced by them, or because they do not read them. Further

research on this is needed. Fifth, though we believe competitive altruism provides the best holistic

framework for explaining our findings, there could be alternative theoretical explanations. As we earlier

noted (in footnotes 4 and 5), competitive altruism intersects with other theoretical traditions that can be

further differentiated in future research. Finally, with appropriate data, future research can extend this study

by examining how friend-contribution cues affect contributions to other restaurants.

References

Altermatt, E. R., and Pomerantz, E. M. 2005. “The Implications of Having High-Achieving Versus Low-

Achieving Friends: A Longitudinal Analysis,” Social Development (14:1), pp. 61–81.

Anderson, C., and Kilduff, G. J. 2009. “The Pursuit of Status in Social Groups,” Current Directions in

Psychological Science (18:5), pp. 295–298.

Anderson, E., and Simester, D. 2014. “Reviews without a Purchase: Low Ratings, Loyal Customers, and

Deception,” Journal of Marketing Research (51:3), pp. 249–269.

Andreoni, J. 1989. “Giving with Impure Altruism: Applications to Charity and Ricardian Equivalence,”

Journal of Political Economy (97:6), pp. 1447–1458.

Andreoni, J. 1990. “Impure Altruism and Donations to Public Goods: A Theory of Warm-Glow Giving,”

The Economic Journal (100:401), pp. 464–477.

Aral, S., and Walker, D. 2011. “Creating Social Contagion Through Viral Product Design: A Randomized

Trial of Peer Influence in Networks,” Management Science (57:9), pp. 1623–1639.

Bapna, R., and Umyarov, A. 2015. “Do Your Online Friends Make You Pay? A Randomized Field

Experiment on Peer Influence in Online Social Networks,” Management Science (61:8), pp. 1902–

1920.

Bernheim, B. D. 1994. “A Theory of Conformity,” Journal of Political Economy (102:5), pp. 841–877.

BrightLocal. 2018. “Local Consumer Review Survey.” (https://www.brightlocal.com/research/local-consumer-review-survey).

Burtch, G., Hong, Y., Bapna, R., and Griskevicius, V. 2018. “Stimulating Online Reviews by Combining

Financial Incentives and Social Norms,” Management Science (64:5), pp. 2065–2082.

Carpenter, J. P. 2004. “When in Rome: Conformity and the Provision of Public Goods,” Journal of Socio-

Economics (33:4), pp. 395–408.

Chen, Y., Harper, M., Konstan, J., and Li, S. X. 2010. “Social Comparisons and Contributions to Online

Communities: A Field Experiment on MovieLens,” American Economic Review (100:4), pp. 1358–1398.

Cook, T. D., Deng, Y., and Morgano, E. 2007. “Friendship Influences During Early Adolescence: The

Special Role of Friends’ Grade Point Average,” Journal of Research on Adolescence (17:2), pp. 325–

356.

Darley, J., and Latané, B. 1968. “Bystander Intervention in Emergencies: Diffusion of Responsibility,”

Journal of Personality and Social Psychology (8:4p1), pp. 377–383.

Dellarocas, C., Gao, G., and Narayan, R. 2010. “Are Consumers More Likely to Contribute Online Reviews

for Hit or Niche Products?,” Journal of Management Information Systems (27:2), pp. 127–158.

Dellarocas, C., and Narayan, R. 2006. “A Statistical Measure of a Population’s Propensity to Engage in

Post-Purchase Online Word-of-Mouth,” Statistical Science (21:2), pp. 277–285.

Dellarocas, C., Zhang, X., and Awad, N. 2007. “Exploring the Value of Online Product Reviews in

Forecasting Sales: The Case of Motion Pictures,” Journal of Interactive Marketing (21:4), pp. 23–45.

Dennis, A. R., Pootheri, S. K., and Natarajan, V. L. 1998. “Lessons from the Early Adopters of Web

Groupware,” Journal of Management Information Systems (14:4), pp. 65–86.

Dewan, S., Ho, Y., and Ramaprasad, J. 2017. “Popularity or Proximity: Characterizing the Nature of Social

Influence in an Online Music Community,” Information Systems Research (28:1), pp. 117–136.

Dey, E. L. 1997. “Undergraduate Political Attitudes,” The Journal of Higher Education (68:4), pp. 398–

413.

Dichter, E. 1966. “How Word-of-Mouth Advertising Works,” Harvard Business Review (44:6), pp. 147–

160.

Diekmann, A. 1985. “Volunteer’s Dilemma,” Journal of Conflict Resolution (29:4), pp. 605–610.

Donath, J. 2002. “Identity and Deception in the Virtual Community,” Communities in Cyberspace,

Routledge.

Duan, W., Gu, B., and Whinston, A. 2008. “Do Online Reviews Matter?—An Empirical Investigation of

Panel Data,” Decision Support Systems (45:4), pp. 1007–1016.

Forman, C., Ghose, A., and Wiesenfeld, B. 2008. “Examining the Relationship Between Reviews and Sales:

The Role of Reviewer Identity Disclosure in Electronic Markets,” Information Systems Research

(19:3), pp. 291–313.

Fortune. 2016. “A Lack of Online Reviews Could Kill Your Business,” Fortune.

(http://fortune.com/2016/10/23/online-reviews-business-marketing).

Ghasemkhani, H., Kannan, K., and Khernamnuai, W. 2016. “Extrinsic versus Intrinsic Rewards to

Participate in a Crowd Context: An Analysis of a Review Platform,” Working Paper.

Ghose, A., and Ipeirotis, P. 2011. “Estimating the Helpfulness and Economic Impact of Product Reviews:

Mining Text and Reviewer Characteristics,” IEEE Transactions on Knowledge and Data Engineering

(23:10), pp. 1498–1512.

Goes, P. B., Guo, C., and Lin, M. 2016. “Do Incentive Hierarchies Induce User Effort? Evidence from an

Online Knowledge Exchange,” Information Systems Research (27:3), pp. 497–516.

Goes, P. O., Lin, M., and Yeung, C. A. 2014. “‘Popularity Effect’ in User-Generated Contents: Evidence

from Online Product Reviews,” Information Systems Research (25:2), pp. 222–238.

Harbaugh, W., Mayr, U., and Burghart, D. 2007. “Neural Responses to Taxation and Voluntary Giving

Reveal Motives for Charitable Donations,” Science (316:5831), pp. 1622–1625.

Hardy, C. L., and Van Vugt, M. 2006. “Nice Guys Finish First: The Competitive Altruism Hypothesis,”

Personality and Social Psychology Bulletin (32:10), pp. 1402–1413.

Hennig-Thurau, T., Gwinner, K., and Walsh, G. 2004. “Electronic Word-of-Mouth via Consumer-Opinion

Platforms: What Motivates Consumers to Articulate Themselves on the Internet?,” Journal of

Interactive Marketing (18:1), pp. 38–52.

Hiltz, S. R., and Wellman, B. 1997. “Asynchronous Learning Networks as a Virtual Classroom,”

Communications of the ACM (40:9), pp. 44–49.

Huang, N., Hong, Y., and Burtch, G. 2016. “Social Network Integration and User Content Generation:

Evidence from Natural Experiments,” MIS Quarterly (Forthcoming).

Hubbard, J., Harbaugh, W., and Srivastava, S. 2016. “A General Benevolence Dimension That Links

Neural, Psychological, Economic, and Life-Span Data on Altruistic Tendencies,” Journal of

Experimental Psychology (145:10), pp. 1351–1358.

Iyengar, R., Bulte, C. Van den, and Valente, W. T. 2011. “Opinion Leadership and Social Contagion in

New Product Diffusion,” Marketing Science (30:2), pp. 195–212.

Jabr, W., Mookerjee, R., Tan, Y., and Mookerjee, V. 2014. “Leveraging Philanthropic Behavior for

Customer Support: The Case of User Support Forums,” MIS Quarterly (38:1), pp. 187–208.

Jones, C., Hesterly, W., and Borgatti, S. 1997. “A General Theory of Network Governance: Exchange

Conditions and Social Mechanisms,” Academy of Management Review (22:4), pp. 911–945.

Karau, S., and Williams, K. 1993. “Social Loafing: A Meta-Analytic Review and Theoretical Integration,”

Journal of Personality and Social Psychology (65:4), pp. 681–706.

King, G., and Zeng, L. 2001. “Logistic Regression in Rare Events Data,” Political Analysis (9), pp. 137–

163.

King, G., and Zeng, L. 2002. “Estimating Risk and Rate Levels, Ratios, and Differences in Case-Control

Studies,” Statistics in Medicine (21), pp. 1409–1427.

Lee, G. M., Qiu, L., and Whinston, A. B. 2016. “A Friend Like Me: Modeling Network Formation in a

Location-Based Social Network,” Journal of Management Information Systems (33:4), pp. 1008–

1033.

Lee, Y., Hosanagar, K., and Tan, Y. 2015. “Do I Follow My Friends or the Crowd? Information Cascades

in Online Movie Rating,” Management Science (61:9), pp. 2241–2258.

Levina, N., and Arriaga, M. 2014. “Distinction and Status Production on User-Generated Content

Platforms: Using Bourdieu’s Theory of Cultural Production to Understand Social Dynamics in Online

Fields,” Information Systems Research (25:3), pp. 468–488.

Liu, D., Brass, D., Lu, Y., and Chen, D. 2015. “Friendships in Online Peer-to-Peer Lending: Pipes, Prisms,

and Relational Herding,” MIS Quarterly (39:3), pp. 729–742.

Liu, J., Cao, Y., Lin, C., Huang, Y., and Zhou, M. 2007. “Low-Quality Product Review Detection in

Opinion Summarization,” in Computational Linguistics, pp. 334–342.

Lu, Y., Tsaparas, P., Ntoulas, A., and Polanyi, L. 2010. “Exploiting Social Context for Review Quality

Prediction,” in Proceedings of the 19th International Conference on World Wide Web, ACM, pp. 691–

700.

Luca, M. 2016. “Reviews, Reputation, and Revenue: The Case of Yelp.com,” Harvard Business School

NOM Unit Working Paper.

Ma, X., Khansa, L., Deng, Y., and Kim, S. S. 2013. “Impact of Prior Reviews on the Subsequent Review

Process in Reputation Systems,” Journal of Management Information Systems (30:3), pp. 279–310.

Milinski, M., Semmann, D., and Krambeck, H. J. 2002. “Reputation Helps Solve the ‘Tragedy of the

Commons,’” Nature (415:6870), pp. 424–426.

Moe, W. W., and Schweidel, D. A. 2012. “Online Product Opinions: Incidence, Evaluation, and Evolution,” Marketing

Science (31:3), pp. 372–386.

Moretti, E. 2011. “Social Learning and Peer Effects in Consumption: Evidence from Movie Sales,” The

Review of Economic Studies (78:1), pp. 356–393.

Mudambi, S., and Schuff, D. 2010. “What Makes a Helpful Review? A Study of Customer Reviews on

Amazon.com,” MIS Quarterly (34:1), pp. 185–200.

Otterbacher, J. 2009. “‘Helpfulness’ in Online Communities: A Measure of Message Quality,” SIGCHI

Conf. Human Factor Comput. Systems, pp. 955–964.

Pan, Y., and Zhang, J. Q. 2011. “Born Unequal: A Study of the Helpfulness of User-Generated Product

Reviews,” Journal of Retailing (87:4), pp. 598–612.

Qiu, L., Shi, Z., and Whinston, A. 2018. “Learning from Your Friends’ Check-Ins: An Empirical Study of

Location-Based Social Networks,” Information Systems Research (29:4), pp. 1044–1061.

ReviewTrackers. 2018. “2018 ReviewTrackers Online Reviews Survey.”

(https://www.reviewtrackers.com/reports/online-reviews-survey).

Ridings, C. M., and Gefen, D. 2006. “Virtual Community Attraction: Why People Hang Out Online,”

Journal of Computer-Mediated Communication (10:1).

Roberts, G. 1998. “Competitive Altruism: From Reciprocity to the Handicap Principle,” Proceedings of the

Royal Society B: Biological Sciences (265:1394), pp. 427–431.

Shang, J., and Croson, R. 2009. “A Field Experiment in Charitable Contribution: The Impact of Social

Information on the Voluntary Provision of Public Goods,” The Economic Journal (119:540), pp. 1422–1439.

Sridhar, S., and Srinivasan, R. 2012. “Social Influence Effects in Online Product Ratings,” Journal of

Marketing (76:5), pp. 70–88.

Stephen, A., Bart, Y., and Plessis, C. Du. 2012. “Does Paying For Online Product Reviews Pay Off? The

Effects of Monetary Incentives on Content Creators and Consumers,” NA-Advances in Consumer

Research (40), pp. 228–231.

Tsvetkova, M., and Macy, M. W. 2014. “The Social Contagion of Generosity,” PLoS ONE (9:2).

Tucker, C., and Zhang, J. 2007. “Long Tail or Steep Tail? A Field Investigation into How Online Popularity

Information Affects the Distribution of Customer Choices (Working Paper).”

Underwood, H., and Findlay, B. 2004. “Internet Relationships and Their Impact on Primary Relationships,”

Behaviour Change (21:02), pp. 127–140.

Vesterlund, L. 2003. “The Informational Value of Sequential Fundraising,” Journal of Public Economics

(87:3–4), pp. 627–657.

Vesterlund, L. 2006. “Why Do People Give,” The Nonprofit Sector: A Research Handbook (2nd ed.), (W. W.

Powell and R. S. Steinberg, eds.), Yale University Press.

Wang, A., Zhang, M., and Hann, I. 2018. “Socially Nudged: A Quasi-Experimental Study of Friends’ Social

Influence in Online Product Ratings,” Information Systems Research (29:3), pp. 641–655.

Wang, Y., Goes, P., Wei, Z., and Zeng, D. 2017. “Production of Online Word-Of-Mouth: Peer Effects and

the Moderation of User Characteristics,” Working Paper.

Wang, Z. 2010. “Anonymity, Social Image, and the Competition for Volunteers: A Case Study of the Online

Market for Reviews,” The B.E. Journal of Economic Analysis & Policy (10:1), pp. 1–34.

Wasko, M. M., and Faraj, S. 2005. “Why Should I Share? Examining Social Capital and Knowledge

Contribution in Electronic Networks of Practice,” MIS Quarterly (29:1), pp. 35–57.

Wasko, M. M., Teigland, R., and Faraj, S. 2009. “The Provision of Online Public Goods: Examining Social

Structure in an Electronic Network of Practice,” Decision Support Systems (47:3), Elsevier B.V., pp.

254–265.

Willer, R. 2009. “Groups Reward Individual Sacrifice: The Status Solution to the Collective Action

Problem,” American Sociological Review (74:1), pp. 23–43.

Witty, C. J., Urla, J., Leslie, L. M., Snyder, M., Glomb, T. M., Carman, K. G., Rodell, J. B., Agypt, B.,

Christensen, R. K., and Nesbit, R. 2013. “Social Influences and the Private Provision of Public Goods:

Evidence from Charitable Contributions in the Workplace,” Stanford Institute for Economic Policy

Research (41:5), pp. 49–62.

Wu, C., Che, H., Chan, T., and Lu, X. 2015. “The Economic Value of Online Reviews,” Marketing Science

(34:5), pp. 739–754.

Xia, M., Huang, Y., Duan, W., and Whinston, A. B. 2011. “To Continue Sharing or Not to Continue

Sharing? – An Empirical Analysis of User Decision in Peer-to-Peer Sharing Networks,” Information

Systems Research (23:1), pp. 1–13.

Yelp, I. 2011. “Yelp and the ‘1/9/90 Rule.’” (https://www.yelpblog.com/2011/06/yelp-and-the-1990-rule).

Yin, D., Bond, S., and Zhang, H. 2014. “Anxious or Angry? Effects of Discrete Emotions on the Perceived

Helpfulness of Online Reviews,” MIS Quarterly (38:2), pp. 539–560.

Zeng, X., and Wei, L. 2013. “Social Ties and User Content Generation: Evidence from Flickr,” Information

Systems Research (24:1), pp. 71–87.

Zhang, J., Liu, Y., and Chen, Y. 2015. “Social Learning in Networks of Friends versus Strangers,”

Marketing Science (34:4), pp. 573–589.

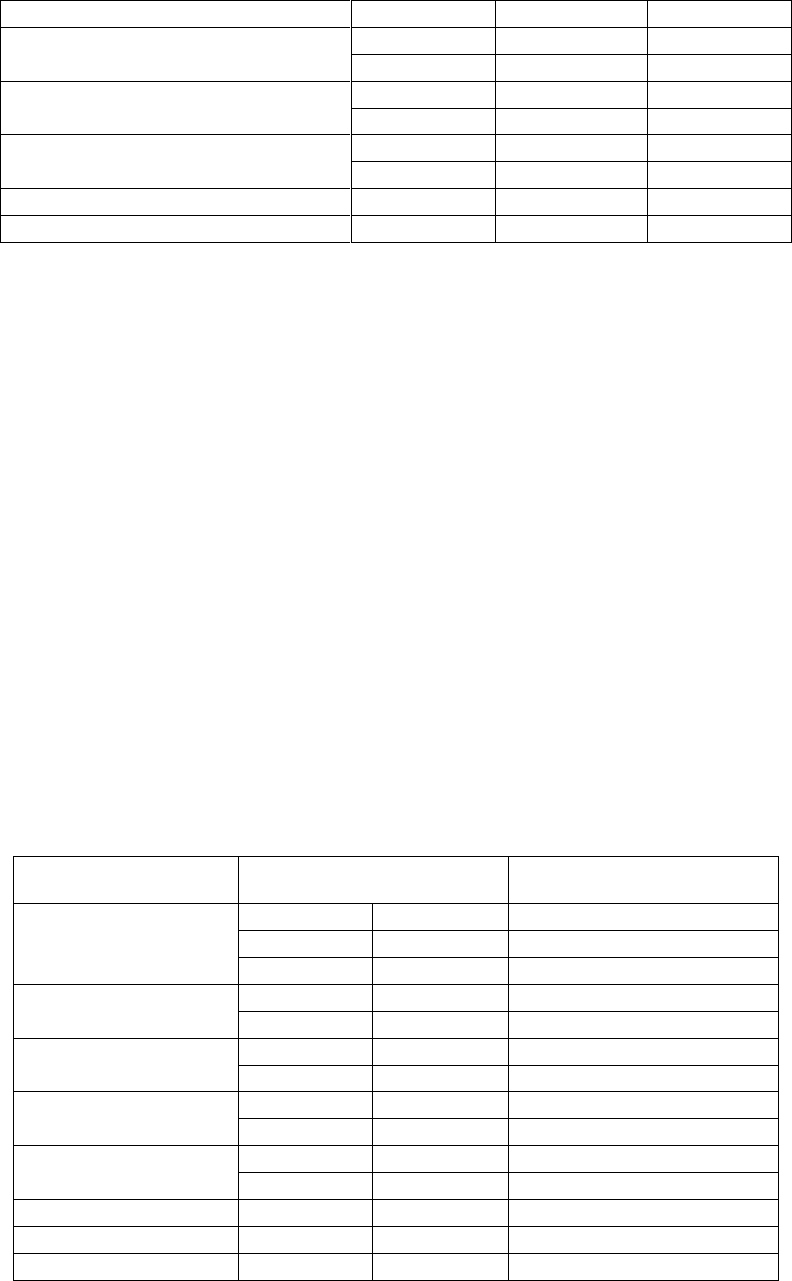

Table 1: Descriptive Statistics of Variables (N = 3,663,479)

| Variable | Definition | Mean | Std. Dev. | Min | Max |
|---|---|---|---|---|---|
| Review_{ijt} | Whether user i writes a review on restaurant j in period t: yes 1; otherwise 0 | 0.01 | 0.07 | 0.00 | 1.00 |
| CurFrndReviews_{i,j,t-1} | # current-friend reviews of user i on restaurant j in period t-1 | 0.00 | 0.03 | 0.00 | 5.00 |
| FutFrndReviews_{i,j,t-1} | # future-friend reviews of user i on restaurant j in period t-1 | 0.00 | 0.02 | 0.00 | 5.00 |
| NewReviews_{j,t-1} | # new reviews on restaurant j in period t-1 | 0.42 | 1.06 | 0.00 | 38.00 |
| Log#Compliments_{i,t-1} | Log # of compliments sent and received by user i in period t-1 | 0.11 | 0.43 | 0.00 | 5.38 |
| #SelfReviews_{i,t-1} | # of reviews written by user i in period t-1 | 0.21 | 0.90 | 0.00 | 42.00 |
| Log#CumSelfReview_{i,t-1} | Log # cumulative reviews by user i up to period t-1 | 3.99 | 1.35 | 0.00 | 7.37 |
| LogTenure_{i,t-1} | Log days elapsed since user i registered on Yelp up to period t-1 | 7.09 | 0.52 | 3.85 | 8.03 |
| Log#Friends_{i,t-1} | Log (1+ # friends of user i in period t-1) | 3.52 | 1.08 | 1.10 | 7.00 |
| Elite_{i} | Whether user i is an elite user | 0.36 | 0.48 | 0.00 | 1.00 |
| Female_{i} | Whether user i is female | 0.45 | 0.50 | 0.00 | 1.00 |
| CityIncome_{i} | Median household income (thousands of dollars) of the city user i lives | 69.37 | 13.72 | 24.49 | 192.25 |
| Dist_{i,j} | Miles between restaurant j and the city where user i lives | 50.35 | 66.57 | 0.00 | 439.94 |
| AvgRatingRestaurant_{j,t-1} | Cumulative average rating of restaurant j up to period t-1 | 3.59 | 0.69 | 0.50 | 5.00 |
| AvgVariRestaurant_{j,t-1} | Variance of cumulative ratings of restaurant j up to period t-1 | 1.07 | 0.30 | 0.00 | 2.00 |
| Log#CumReviews_{j,t-1} | Log # cumulative reviews of restaurant j up to period t-1 | 3.42 | 1.24 | 0.00 | 7.85 |
| Promoted_{j,t-1} | Whether restaurant j is promoted in period t-1 | 0.00 | 0.02 | 0.00 | 1.00 |
| Claimed_{j,t-1} | Whether restaurant j's business page on Yelp is claimed in period t-1 | 0.66 | 0.47 | 0.00 | 1.00 |
| Price_{j} | Price range of restaurant j: 1 - least expensive; 4 - most expensive | 1.62 | 0.56 | 1.00 | 4.00 |

We omit the summary statistics of the 8 month dummies and 16 restaurant-category dummies for brevity.

Table 2. Effect of Friend Contributions on Review Quantity – Discrete-time Hazard Models

Cells report odds ratios (OR) with standard errors (SE) in parentheses.

| Independent Variables | ReLogit-1 | ReLogit-2 | ReLogit-3 | Logit-3 | Restaurant Popularity | User Experience |
|---|---|---|---|---|---|---|
| CurFrndReviews_{i,j,t-1} | | 2.949*** (0.240) | 2.950*** (0.238) | 2.943*** (0.238) | 20.338*** (8.283) | 9.122*** (3.790) |
| NewReviews_{j,t-1} | | 1.095*** (0.004) | 1.095*** (0.004) | 1.095*** (0.004) | 1.094*** (0.004) | 1.095*** (0.004) |
| CurFrndReviews_{i,j,t-1} * Log#CumReviews_{j,t-1} | | | | | 0.667*** (0.053) | |
| CurFrndReviews_{i,j,t-1} * Log#CumSelfReview_{i,t-1} | | | | | | 0.814** (0.063) |
| FutFrndReviews_{i,j,t-1} | | | 1.870*** (0.267) | 1.843*** (0.263) | 1.861*** (0.265) | 1.831*** (0.262) |
| Log#Compliments_{i,t-1} | 1.355*** (0.016) | 1.344*** (0.016) | 1.341*** (0.016) | 1.341*** (0.016) | 1.338*** (0.016) | 1.342*** (0.016) |
| #SelfReviews_{i,t-1} | 1.120*** (0.004) | 1.121*** (0.004) | 1.120*** (0.004) | 1.120*** (0.004) | 1.120*** (0.004) | 1.120*** (0.004) |
| Log#CumSelfReview_{i,t-1} | 1.456*** (0.015) | 1.451*** (0.015) | 1.451*** (0.015) | 1.451*** (0.015) | 1.451*** (0.015) | 1.454*** (0.015) |
| LogTenure_{i,t-1} | 0.722*** (0.011) | 0.718*** (0.011) | 0.718*** (0.011) | 0.718*** (0.011) | 0.718*** (0.011) | 0.718*** (0.011) |
| Log#Friends_{i,t-1} | 0.912*** (0.008) | 0.913*** (0.008) | 0.913*** (0.008) | 0.913*** (0.008) | 0.914*** (0.008) | 0.912*** (0.008) |
| Elite_{i} | 2.001*** (0.039) | 1.989*** (0.039) | 1.989*** (0.039) | 1.989*** (0.039) | 1.990*** (0.039) | 1.986*** (0.039) |
| Female_{i} | 0.980 (0.015) | 0.972+ (0.015) | 0.972+ (0.015) | 0.972+ (0.015) | 0.973+ (0.015) | 0.972+ (0.015) |
| CityIncome_{i} | 1.001+ (0.001) | 1.001* (0.001) | 1.001* (0.001) | 1.001* (0.001) | 1.001* (0.001) | 1.001* (0.001) |
| Dist_{i,j} | 0.983*** (0.001) | 0.982*** (0.001) | 0.982*** (0.001) | 0.982*** (0.001) | 0.982*** (0.001) | 0.982*** (0.001) |
| AvgRatingRestaurant_{j,t-1} | 1.216*** (0.022) | 1.197*** (0.021) | 1.197*** (0.021) | 1.197*** (0.021) | 1.196*** (0.021) | 1.196*** (0.021) |
| AvgVariRestaurant_{j,t-1} | 0.631*** (0.025) | 0.702*** (0.028) | 0.703*** (0.028) | 0.703*** (0.028) | 0.698*** (0.028) | 0.704*** (0.028) |
| Log#CumReviews_{j,t-1} | 1.731*** (0.014) | 1.584*** (0.014) | 1.583*** (0.014) | 1.583*** (0.014) | 1.594*** (0.014) | 1.582*** (0.014) |
| Promoted_{j,t-1} | 2.913*** (0.434) | 2.725*** (0.407) | 2.727*** (0.407) | 2.700*** (0.403) | 2.693*** (0.402) | 2.699*** (0.403) |
| Claimed_{j,t-1} | 1.110*** (0.021) | 1.119*** (0.021) | 1.120*** (0.021) | 1.120*** (0.021) | 1.119*** (0.021) | 1.120*** (0.021) |
| Price_{j} | 1.251*** (0.018) | 1.266*** (0.018) | 1.266*** (0.018) | 1.266*** (0.018) | 1.263*** (0.018) | 1.266*** (0.018) |
| Constant | 0.000*** (0.000) | 0.000*** (0.000) | 0.000*** (0.000) | 0.000*** (0.000) | 0.000*** (0.000) | 0.000*** (0.000) |
| Month & Restaurant category dummies | included | included | included | included | included | included |
| Log-Likelihood | -200,055 | -199,524 | -199,514 | -199,514 | -199,476 | -199,509 |
| Pseudo R-squared | 0.075 | 0.077 | 0.077 | 0.077 | 0.077 | 0.077 |
| N | 3,663,479 | 3,663,479 | 3,663,479 | 3,663,479 | 3,663,479 | 3,663,479 |

DV = whether user i reviews restaurant j in period t (Review_{ijt}). The values in parentheses are standard errors. +p<0.10, *p<0.05, **p<0.01, ***p<0.001.
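As a reading aid for the odds ratios in Table 2, the following minimal Python sketch converts an odds ratio into a percentage change in odds and computes the friend-review odds ratio implied by the two moderation models at a chosen moderator value. The function names are hypothetical and are not part of the original analysis. The second function assumes the moderator enters the model uncentered, on the log scale reported in Table 1; if the moderator was centered in estimation, the implied values would differ.

```python
def pct_change_in_odds(odds_ratio: float) -> float:
    """Percentage change in the odds for a one-unit increase in the regressor."""
    return (odds_ratio - 1.0) * 100.0


def implied_odds_ratio(main_or: float, interaction_or: float, moderator: float) -> float:
    """Odds ratio of the focal regressor at a given moderator value.

    In a logit model with an interaction term, the log-odds effect of the
    focal regressor is b1 + b3 * moderator, so the odds ratio is
    exp(b1) * exp(b3) ** moderator.
    Assumption: the moderator is uncentered (hypothetical, not stated in the table).
    """
    return main_or * interaction_or ** moderator


if __name__ == "__main__":
    # ReLogit-2: odds ratio of CurFrndReviews taken from Table 2.
    print(pct_change_in_odds(2.949))

    # Restaurant Popularity model: main OR 20.338, interaction OR 0.667,
    # evaluated at the sample mean of Log#CumReviews (3.42, Table 1).
    print(implied_odds_ratio(20.338, 0.667, 3.42))

    # User Experience model: main OR 9.122, interaction OR 0.814,
    # evaluated at the sample mean of Log#CumSelfReview (3.99, Table 1).
    print(implied_odds_ratio(9.122, 0.814, 3.99))
```

Under these assumptions, an odds ratio of 2.949 corresponds to roughly 195 percent higher odds of reviewing, and the interaction odds ratios below one imply that the friend-review effect shrinks as the restaurant accumulates reviews or as the user gains experience (about 5.1 at the mean of Log#CumReviews in the Restaurant Popularity model).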

Table 3. Effect of Friend Contributions on Review Quality – Fixed-Effect OLS

DVs: M1 and M2 = Log Combined Votes Received (LogCombinedVotes); M3 and M4 = Log Useful Votes Received (LogUsefulVotes); M5 and M6 = Novelty (Novelty). Cells report coefficients with standard errors (SE) in parentheses; "-" marks a variable not included in the model.

| Independent Variables | M1 | M2 | M3 | M4 | M5 | M6 |
|---|---|---|---|---|---|---|
| CurFrndReviews_{i,j,t-1} | - | 0.1084* (0.0484) | - | 0.0928* (0.0388) | - | 0.000795** (0.000293) |
| NewReviews_{j,t-1} | - | 0.0080* (0.0034) | - | 0.0063* (0.0027) | - | -0.000137*** (0.000036) |
| ReviewAge_{i,j,t} | -0.0005 (0.0008) | -0.0006 (0.0008) | -0.0004 (0.0007) | -0.0004 (0.0007) | - | - |
| Log#Compliments_{i,t-1} | 0.0432** (0.0159) | 0.0428** (0.0159) | 0.0334** (0.0128) | 0.0331** (0.0128) | 0.000005 (0.000119) | 0.000001 (0.000120) |

#SelfReviews

i,t-1

0.0063+

0.0062+